chaos: Why is the boot server unable to read the startup script?

Alright, I think it's about time to now to once and for all solve the problem with the boot server being unable to read the startup script from the file system. This has been broken for a while and I now intend to solve this bug.

(Warning: as can be seen in the "read time" above, this is a long blog post. It is the result of a debug session that spanned over several nights, spread over multiple weeks, and the whole thing took much, much longer to complete than I had hoped or anticipated. Be forewarned; this will probably take some time to read if you read it all. I was thinking of splitting it up into multiple posts, but I decided not to do it for now; it all forms a continuum in a sense.)

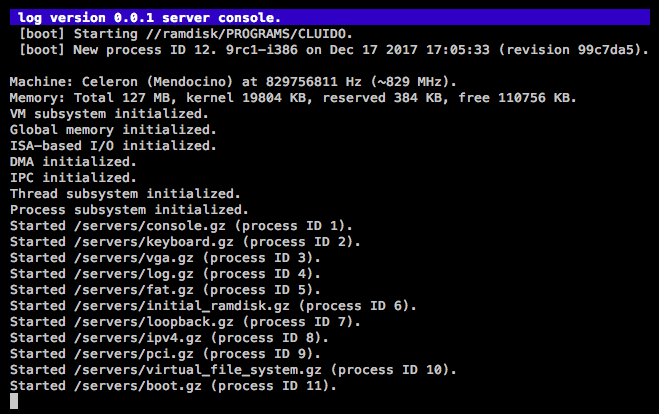

The current state when booting

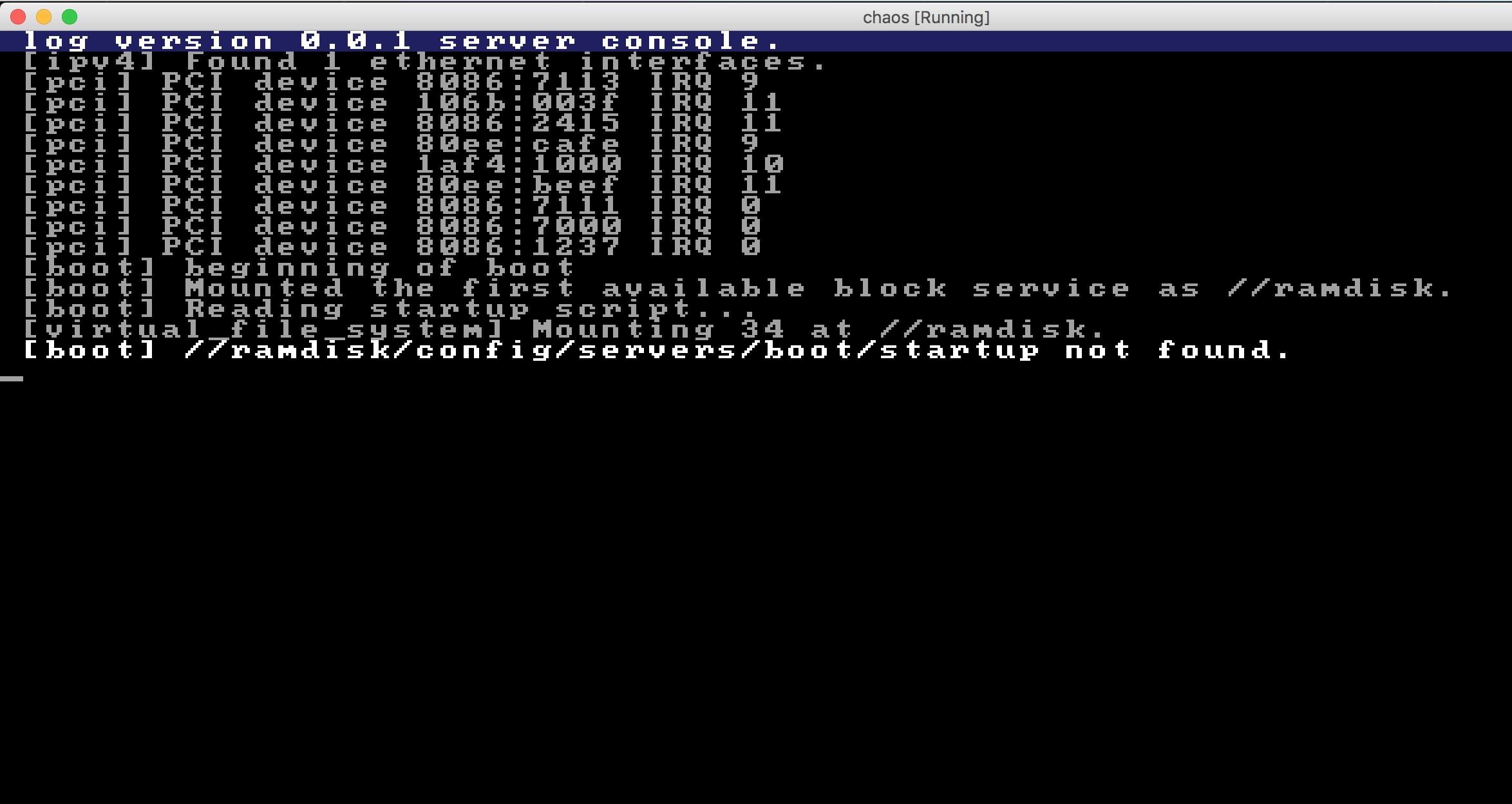

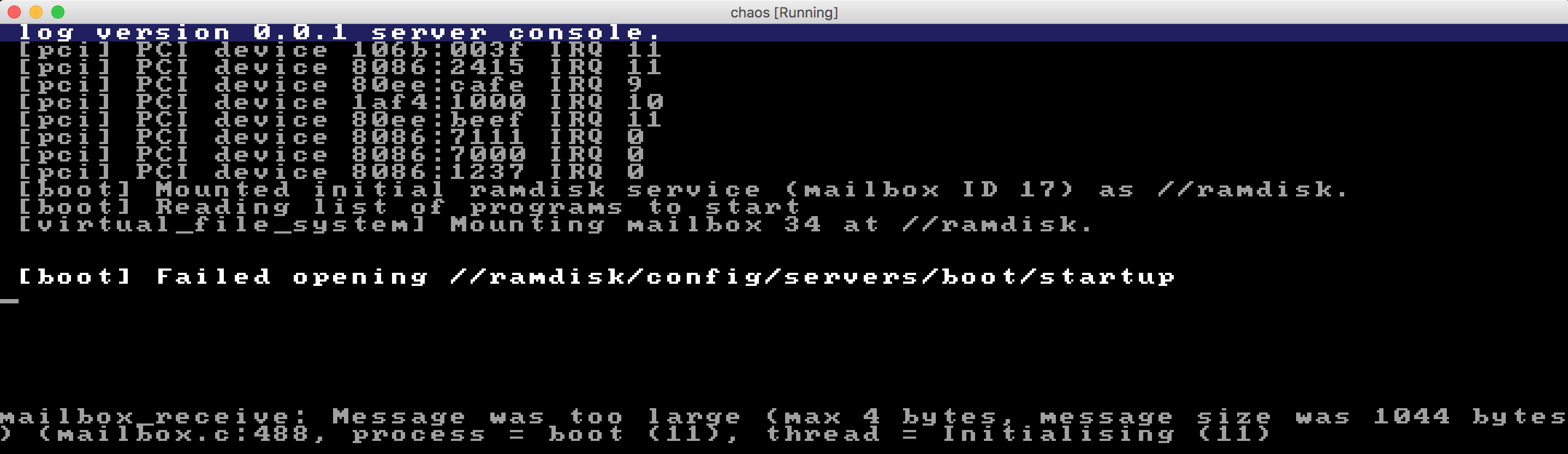

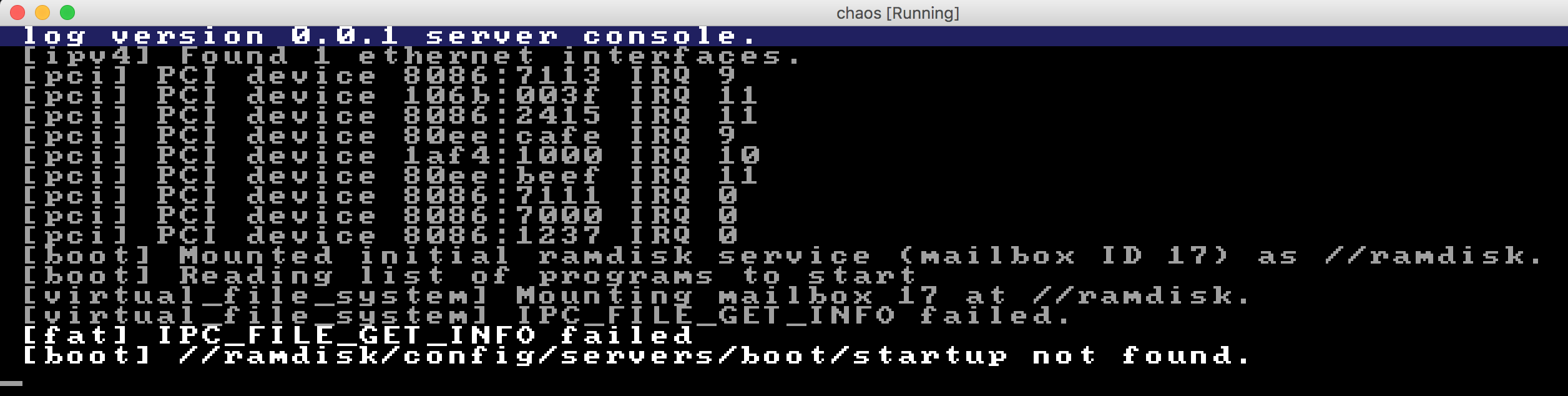

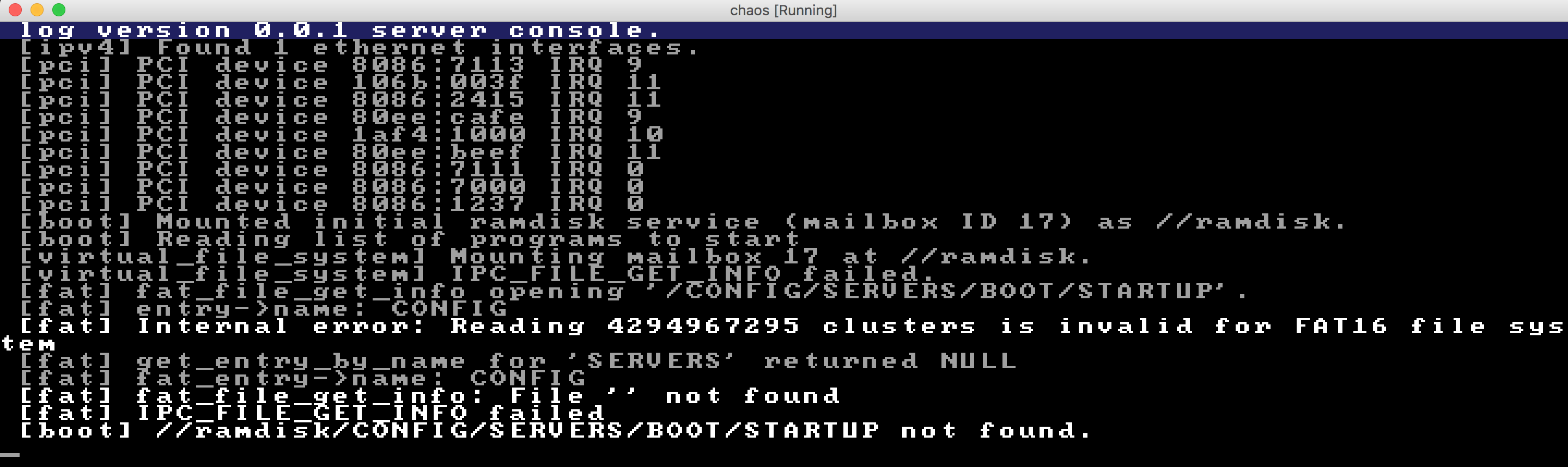

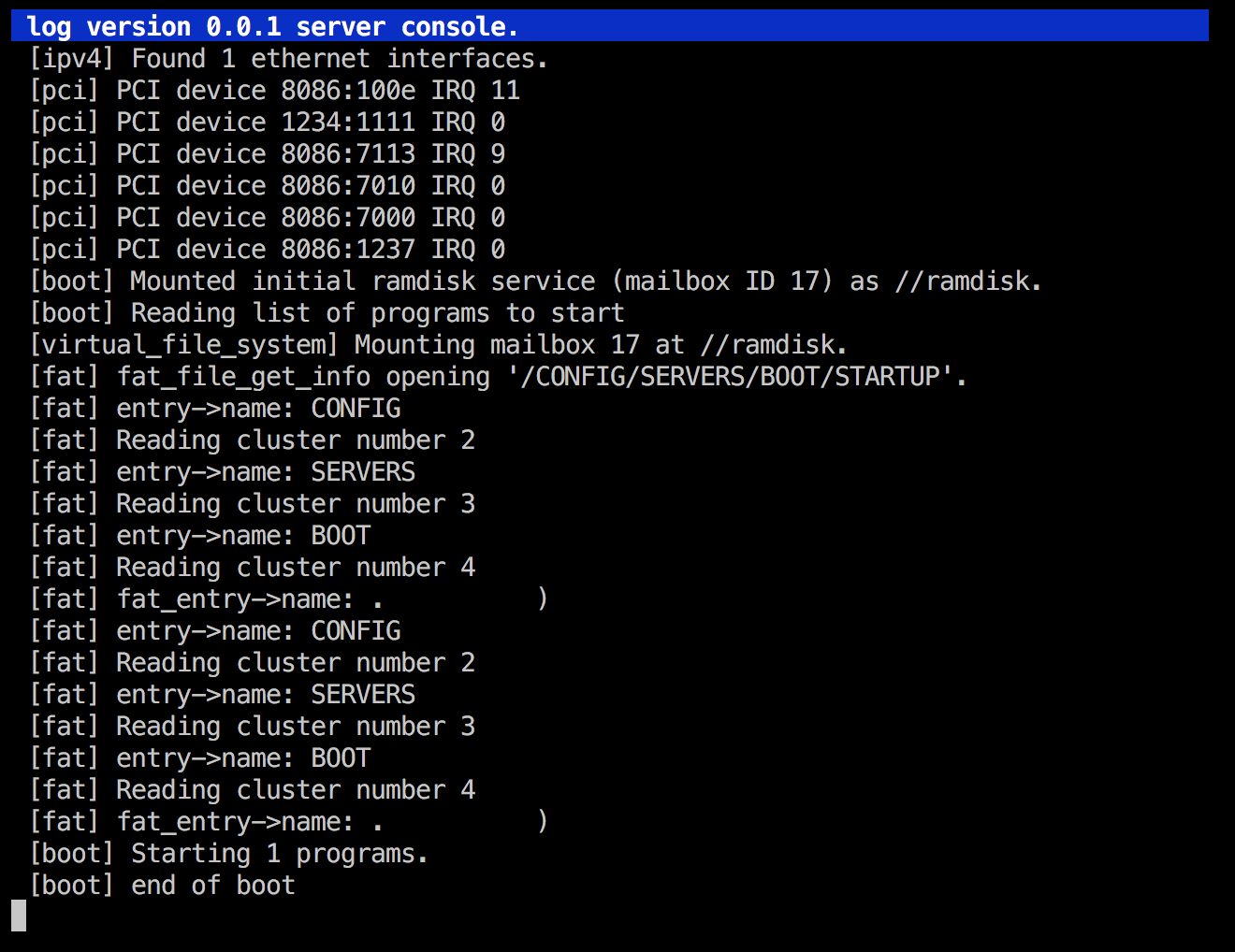

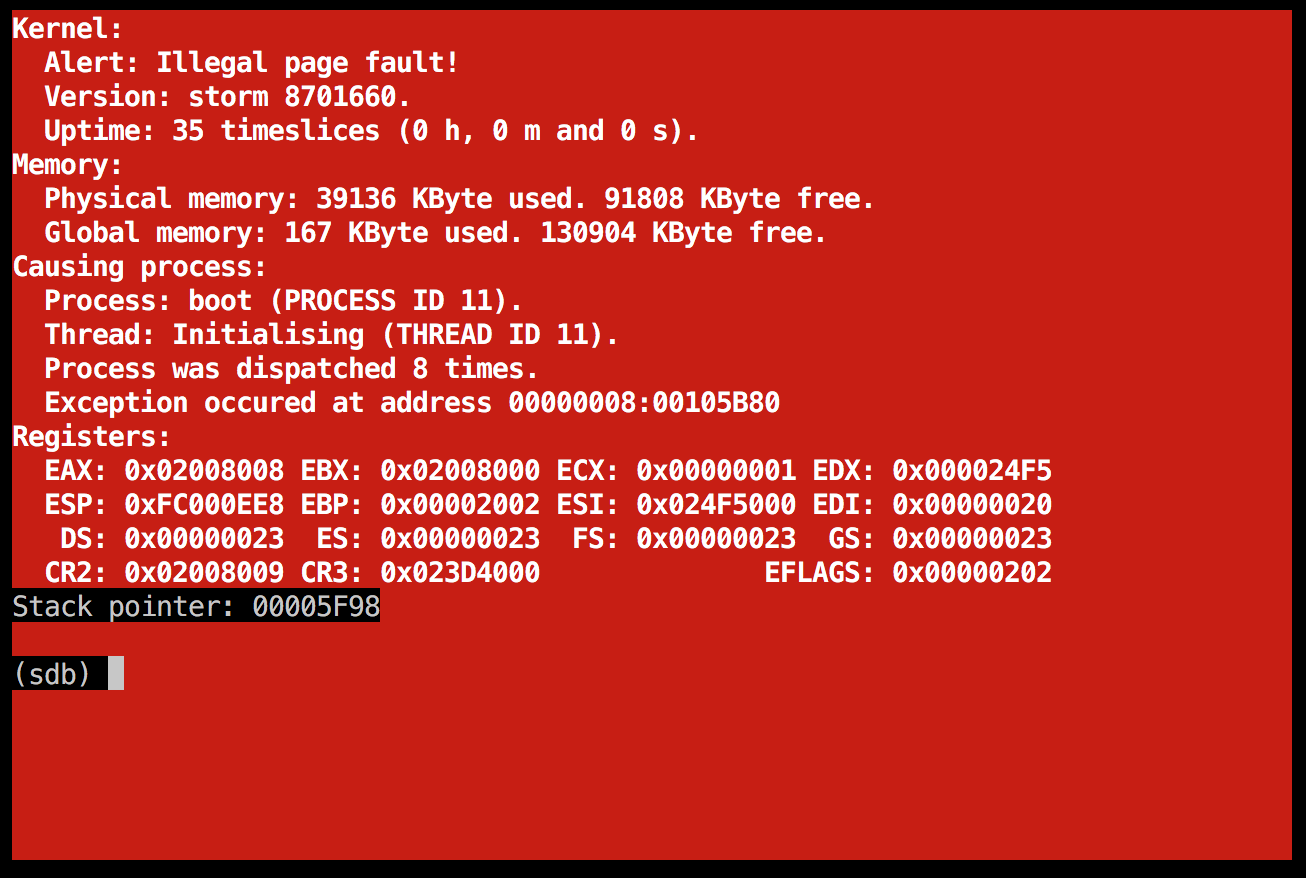

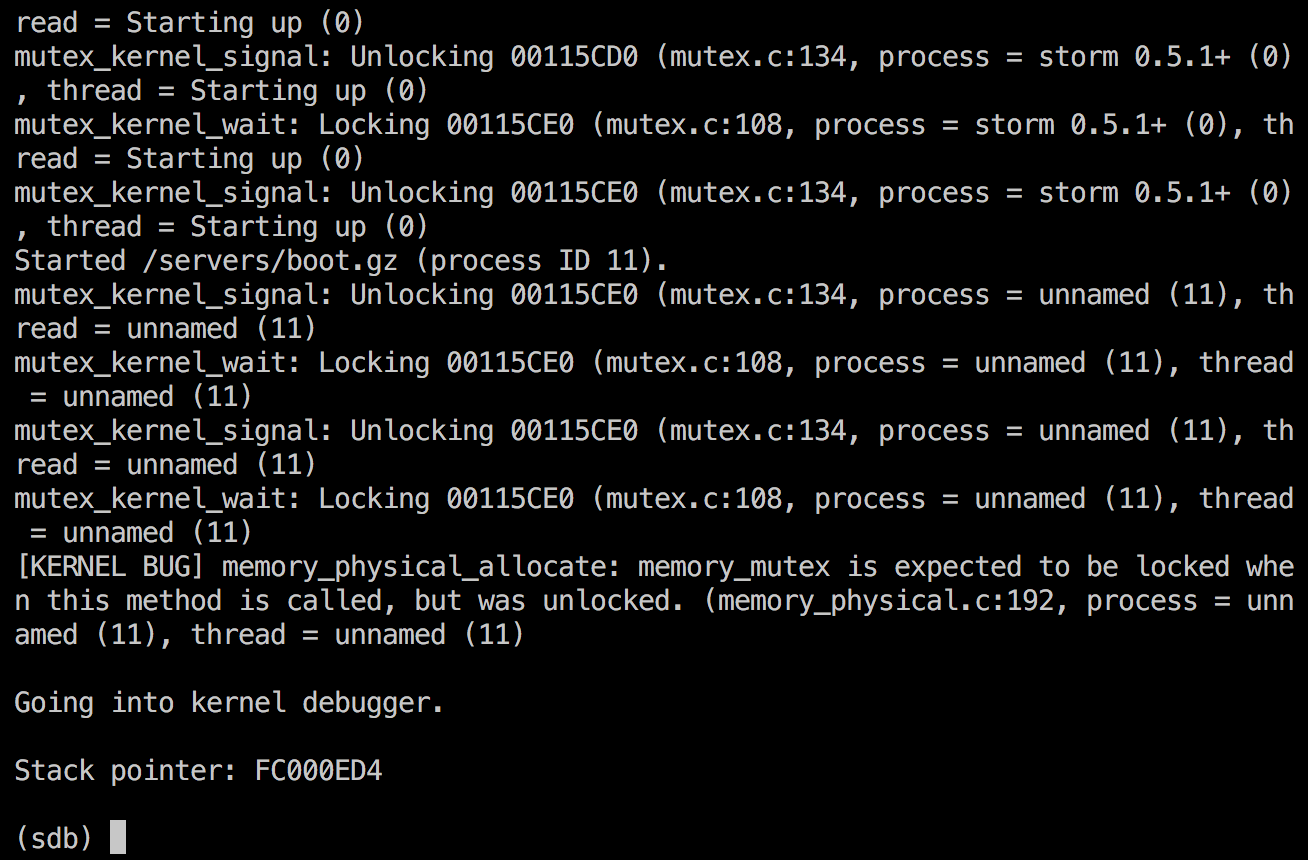

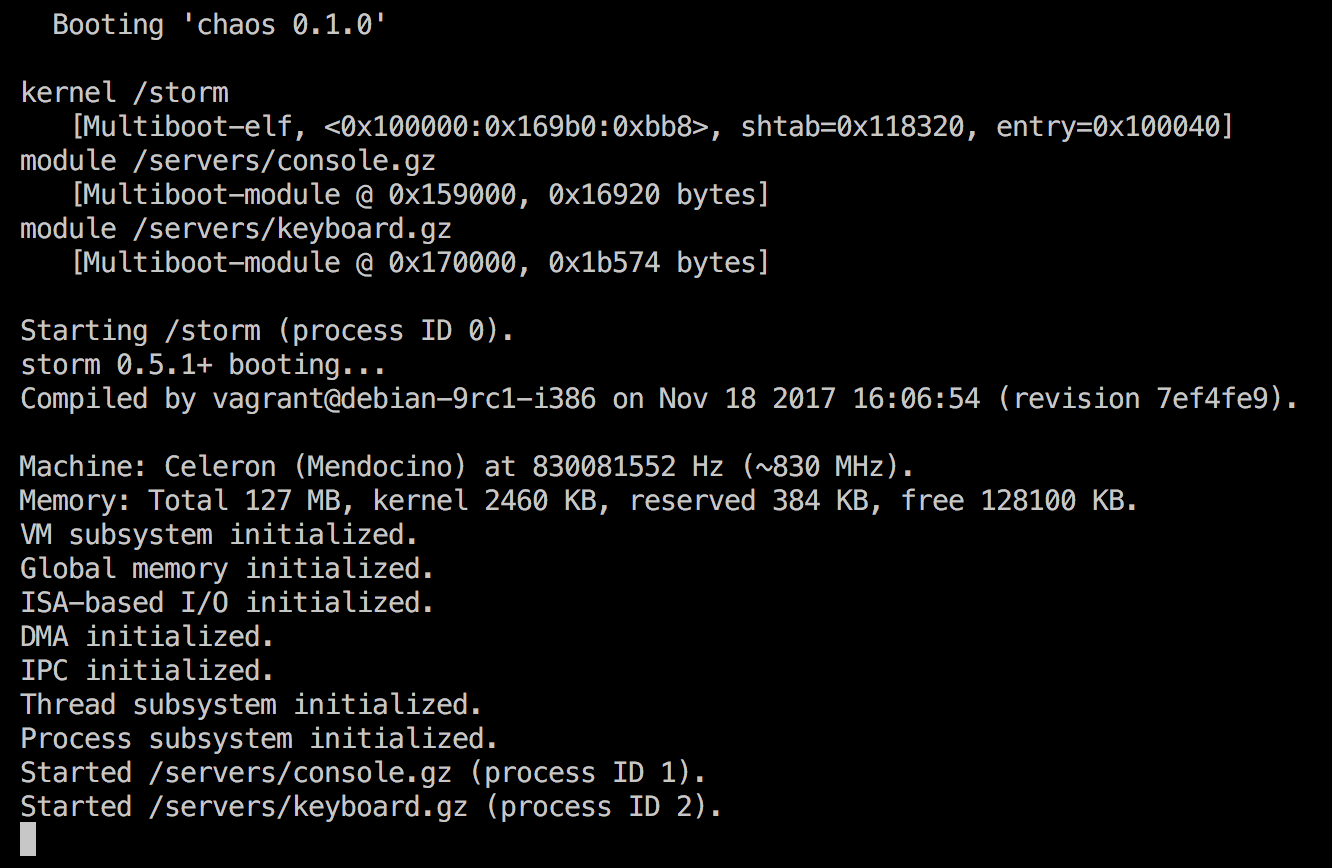

At the moment, here is what the system looks like on startup:

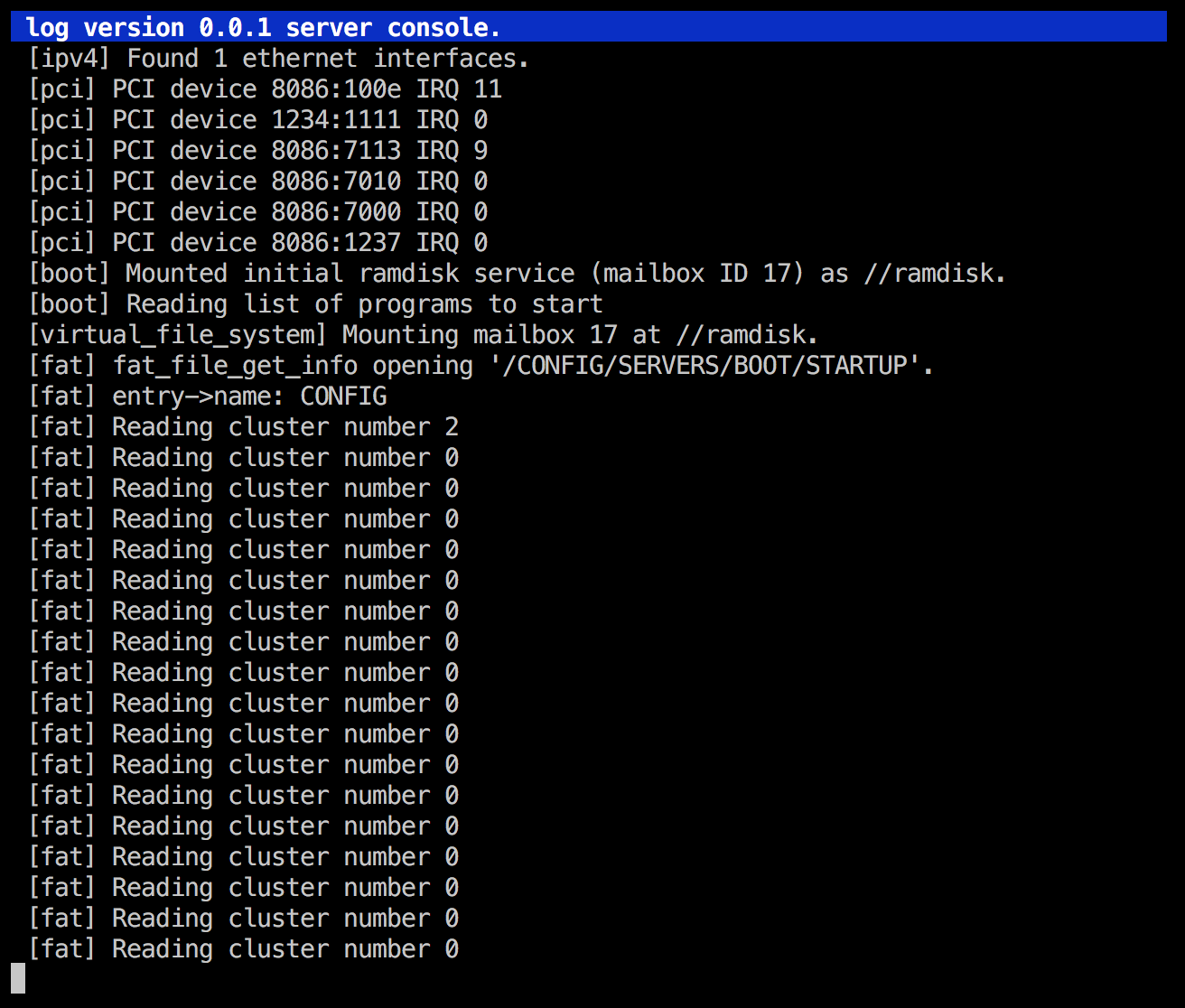

If it was working correctly, this is what it should look like:

- First, the boot server resolves the first available block device. This is supposed to be the initial_ramdisk server.

- It then tells the virtual_file_system server to mount this device as

//ramdisk. - After that, it opens and reads a file named

//ramdisk/config/servers/boot/startup. It parses this file and treats its content as a list of absolute server paths, one path per line. - It loops over the newly parsed list of servers and starts these servers (loading them from the file system and executing the ELF binaries), one by one.

Right now, the first few steps succeed, but when it tries to open the file, it fails miserably, as can be seen above.

We will now look deeply into this issue, but I think at the same time, we should do a bit of a cleanup of the boot server code. As we saw in a previous post, it is rather convoluted so we should refactor it to make it more readable. Many of you who know me from before know that this is actually something I enjoy quite a lot: taking an old, messed up piece of code, and making it shiny, pretty and clean. "Cleaning up the mess" is a task that feels very rewarding to me, and it's a natural application of the boy scout rule - a philosophy regarding software engineering which I believe in very strongly. It simply feels good to leave things in a better state than they were before.

Step one: Refactoring the boot server

So I started doing that. I did a a bunch of changes, trying to find an overall better structure with a main method that looked like this:

int main(void)

{

set_process_and_thread_name();

if (!resolve_log_service())

{

return -1;

}

if (!resolve_vfs_service())

{

return -1;

}

mailbox_id_type initial_ramdisk_id;

if (!resolve_initial_ramdisk_service(&initial_ramdisk_id))

{

return -1;

}

mount_initial_ramdisk(initial_ramdisk_id);

unsigned int file_size;

if (!read_program_list(&file_size))

{

return -1;

}

unsigned int number_of_programs = parse_program_list(file_size);

log_print_formatted(&log_structure, LOG_URGENCY_DEBUG, "Starting %u programs.", number_of_programs);

start_programs(number_of_programs);

system_call_process_parent_unblock();

log_print(&log_structure, LOG_URGENCY_DEBUG, "end of boot");

return 0;

}

Much better and more readable than it previously looked, if you ask me.

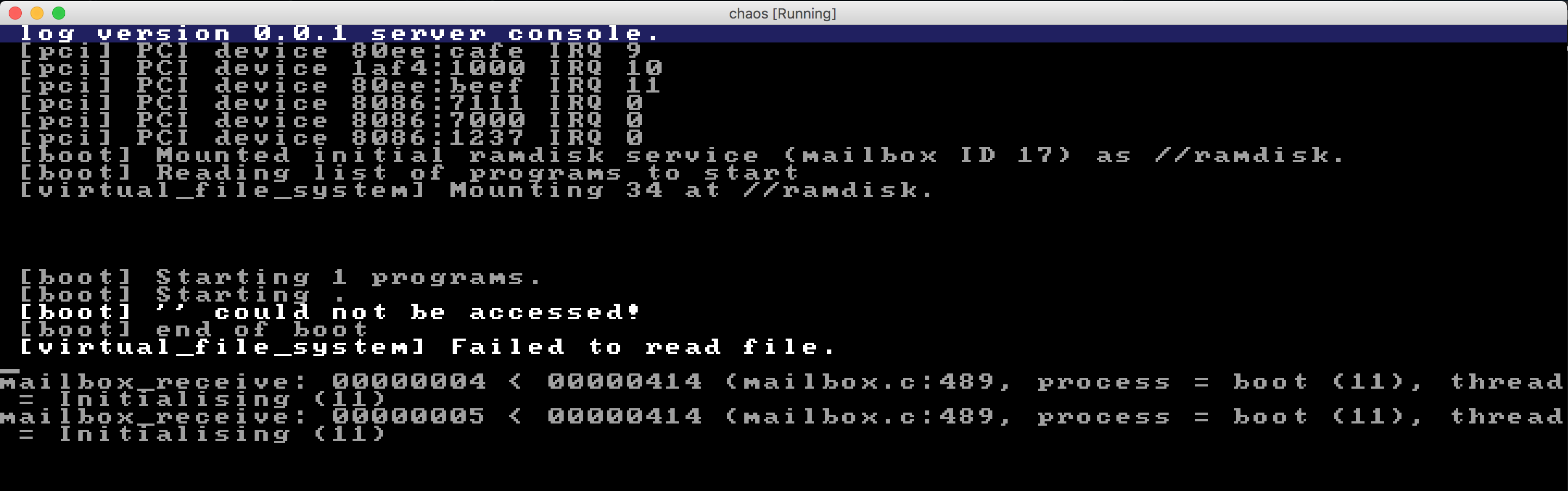

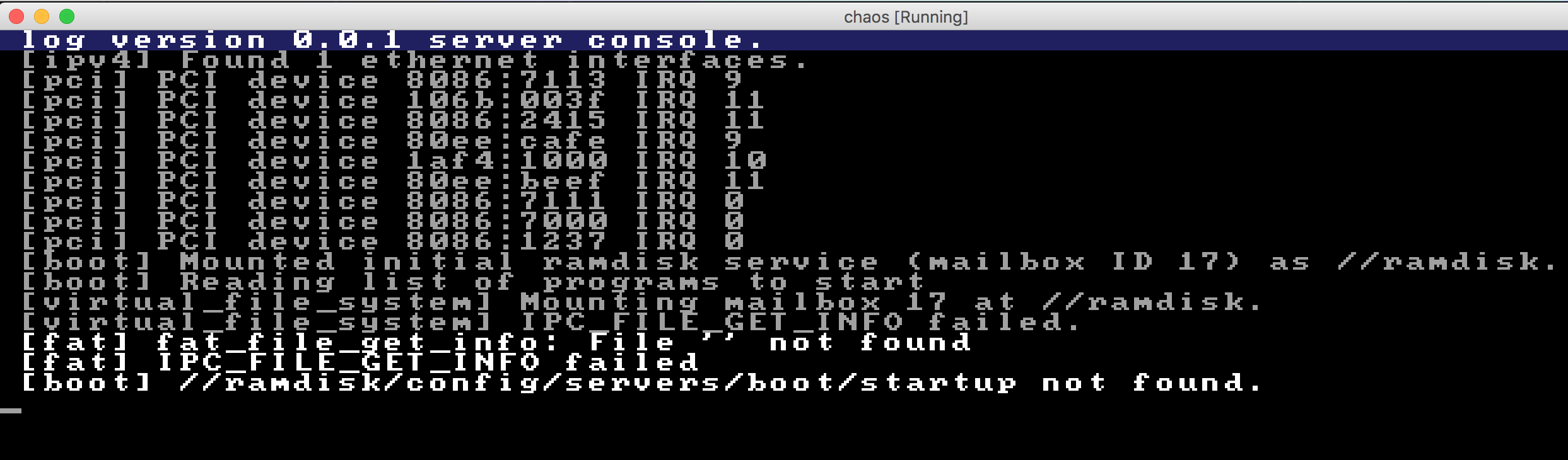

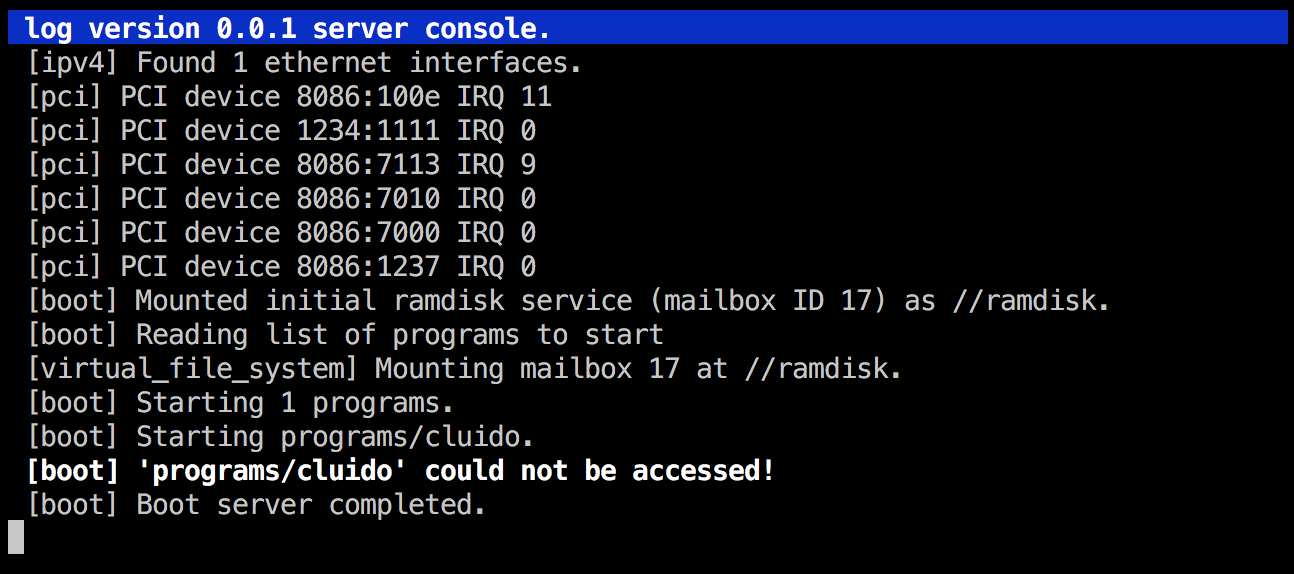

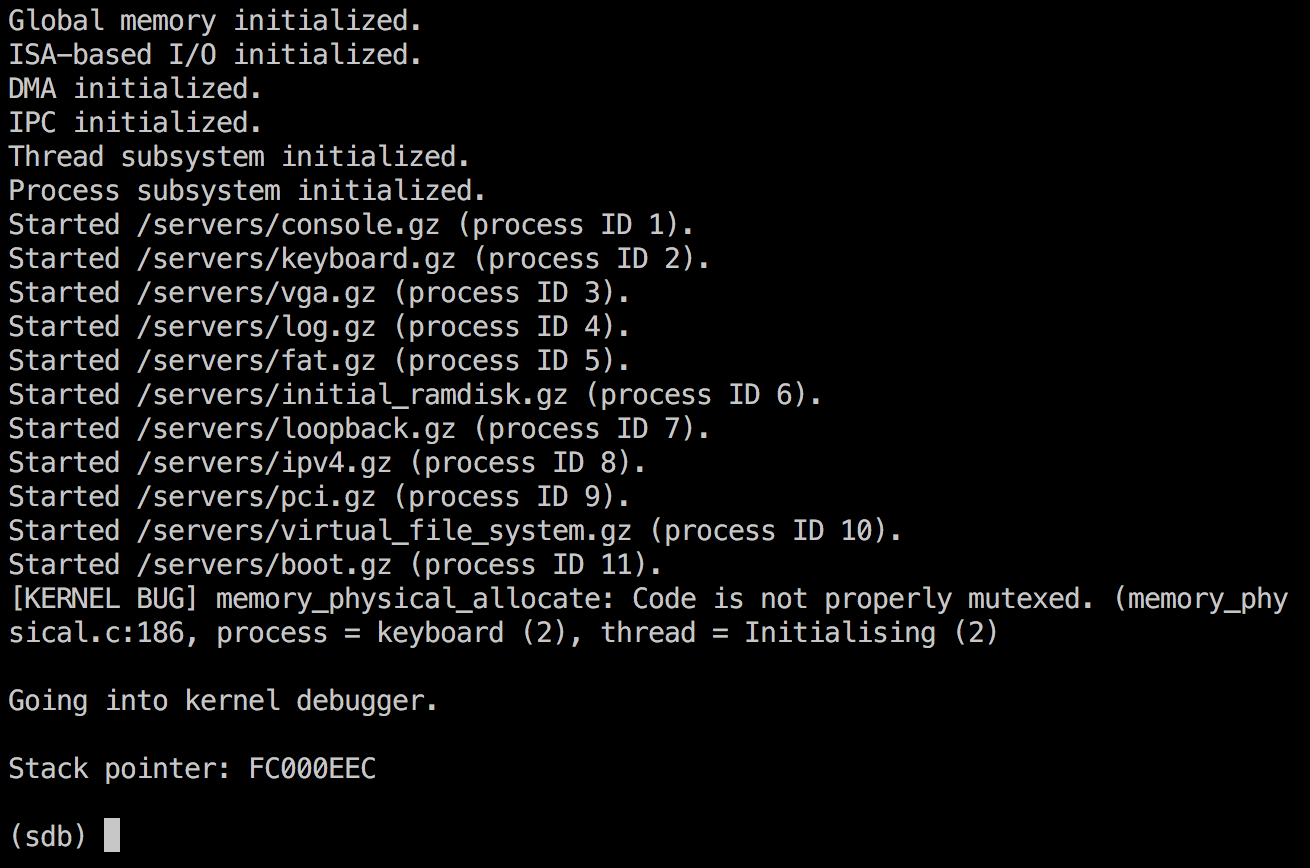

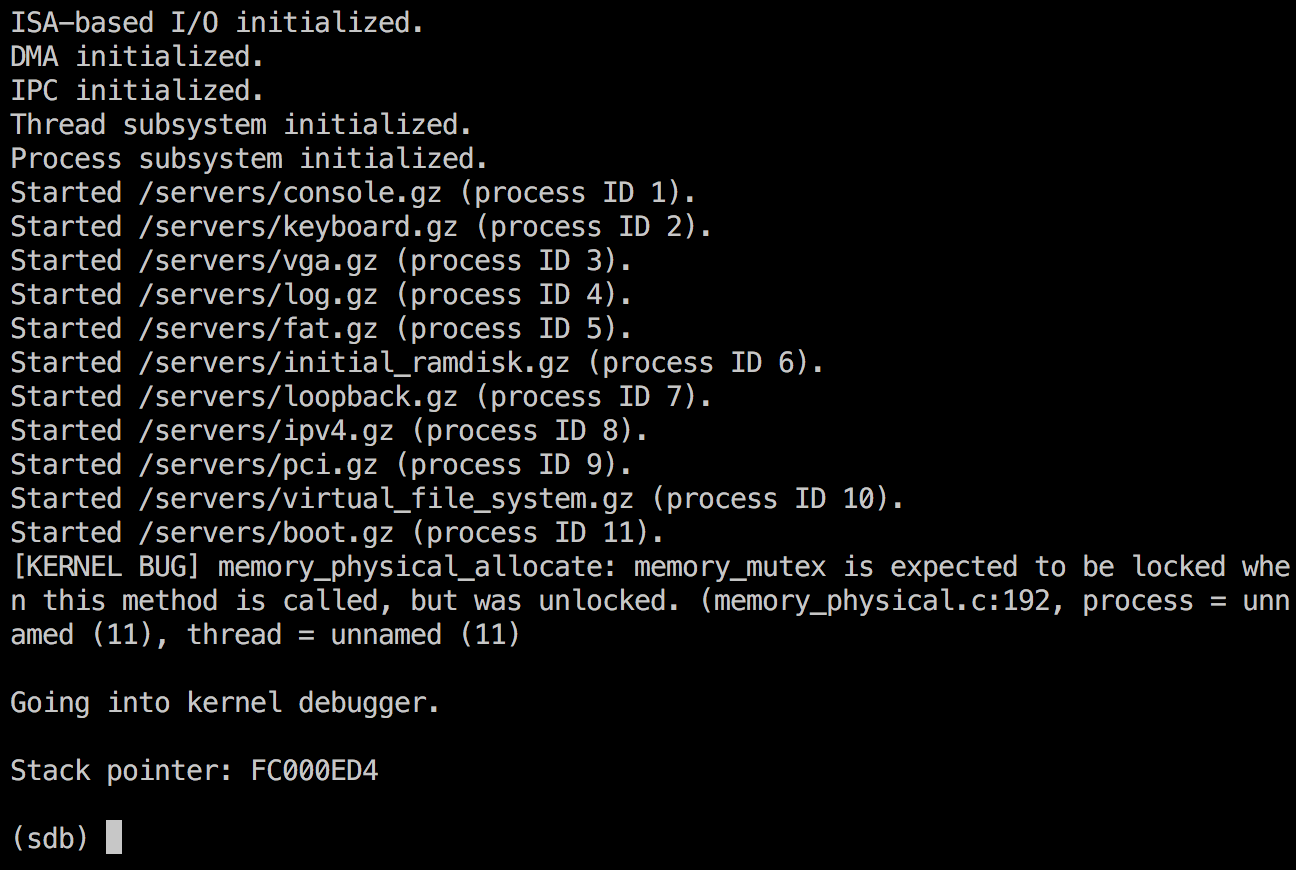

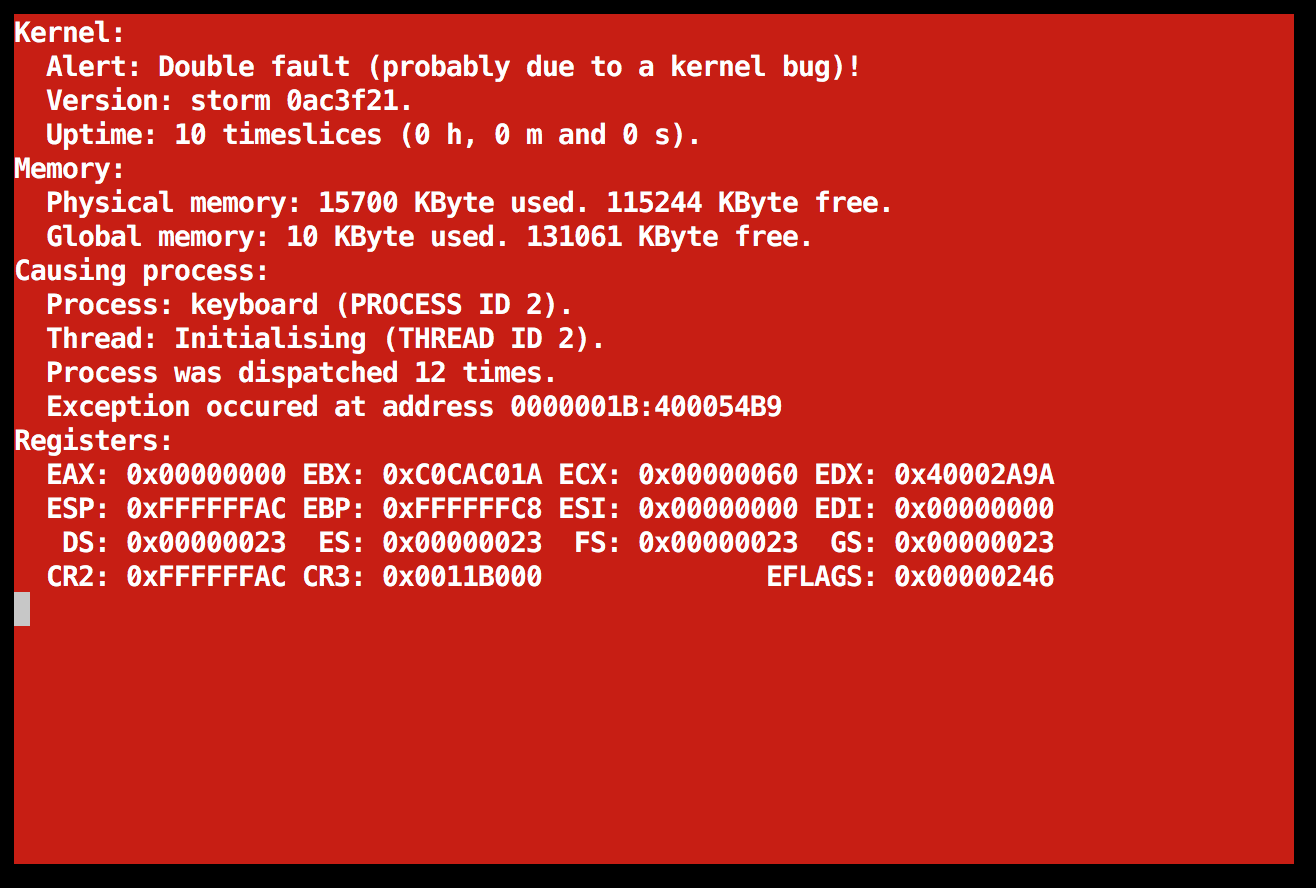

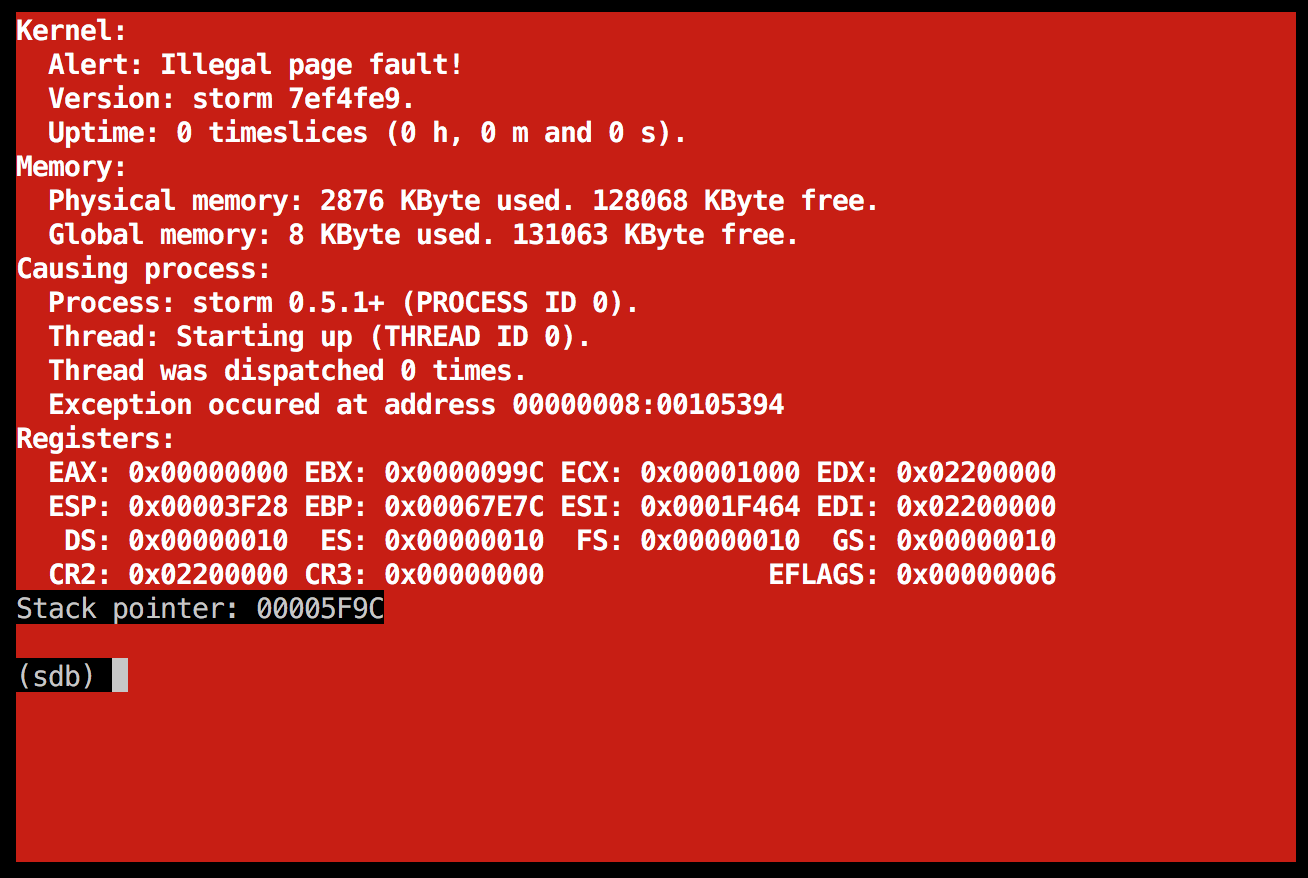

There was only one, slight problem... It now behaved completely differently on startup. Instead of the previous //ramdisk/config/servers/boot/startup not found message seen above, I now got this instead:

Why do we suddenly get three extra blank lines there in the middle?

What file is it failing to read, is it the empty string?

Why does it behave completely differently now? I must have made a mistake indeed...

Fear of making mistakes while refactoring

I think this, things breaking all of a sudden, definitely is one thing holding people back from aggressively refactoring. Sure, I am using a compiled, statically typed language (C in this case), so it's much easier and helpful than with dynamic languages like JavaScript or Ruby, but still - there's always a slight risk of making mistakes. A decent test suite with unit and/or integration tests covering large parts of the system is probably one of the better ways to deal with this. If I had 10 or 100 tests for the boot server, one or more of them would probably be failing now, perhaps leading me in the right direction... but in this case, I regretfully don't have any automated tests at all, so I have to more go with the "read the source code and think" approach instead.

Now, some people would say that this is exactly the reason why they don't like refactoring. Well, people are different, and it also depends greatly on the circumstances. I wouldn't do stuff like this the day before a critical release, but in this case there is really no "deadline" to be afraid of. Also, of course, there is always a risk in doing changes, but there is also a (sometimes even greater) risk in not doing any changes. A risk of "software rot", your program becoming more and more unmaintainable over time. Don't let fear caused by bad experiences in the past control your future. Instead, decide today to take one small step in the right direction - be it refactoring that old code you're thinking about, be it clean out the mess in your locker, be it to contact an old friend and ask for forgiveness for something you did years ago that hurt them... anything that helps you become a better you.

End of preaching, back to coding. ![]()

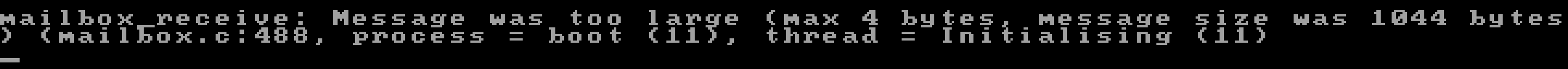

For starters, the mailbox.c message was obviously not very helpful unless you read the source code, so I started by improving that like this:

DEBUG_MESSAGE(DEBUG, "Message was too large (max %u bytes, message size was %u bytes)",

message_parameter->length, mailbox->first_message->length);

But why is it now getting there in the first place? It feels like the IPC messages are "out of sync" somehow, like the one party is sending two messages and the other party receiving only one or something. Then, in the next call, the receiver will receive the now-queued message instead of the one it is expecting to be reading. This could be the case...

Trying to narrow down the problem

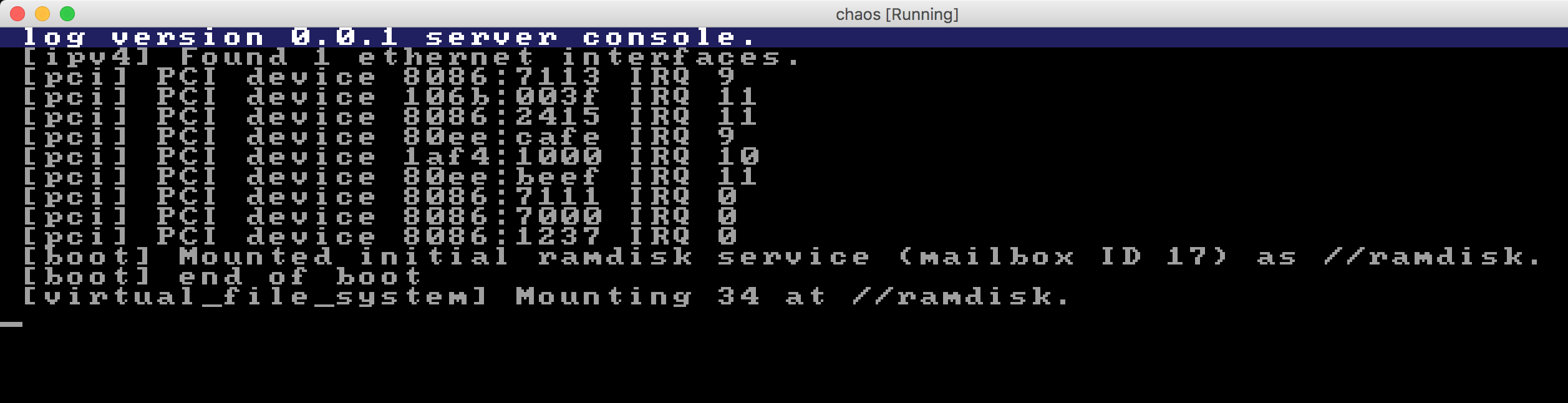

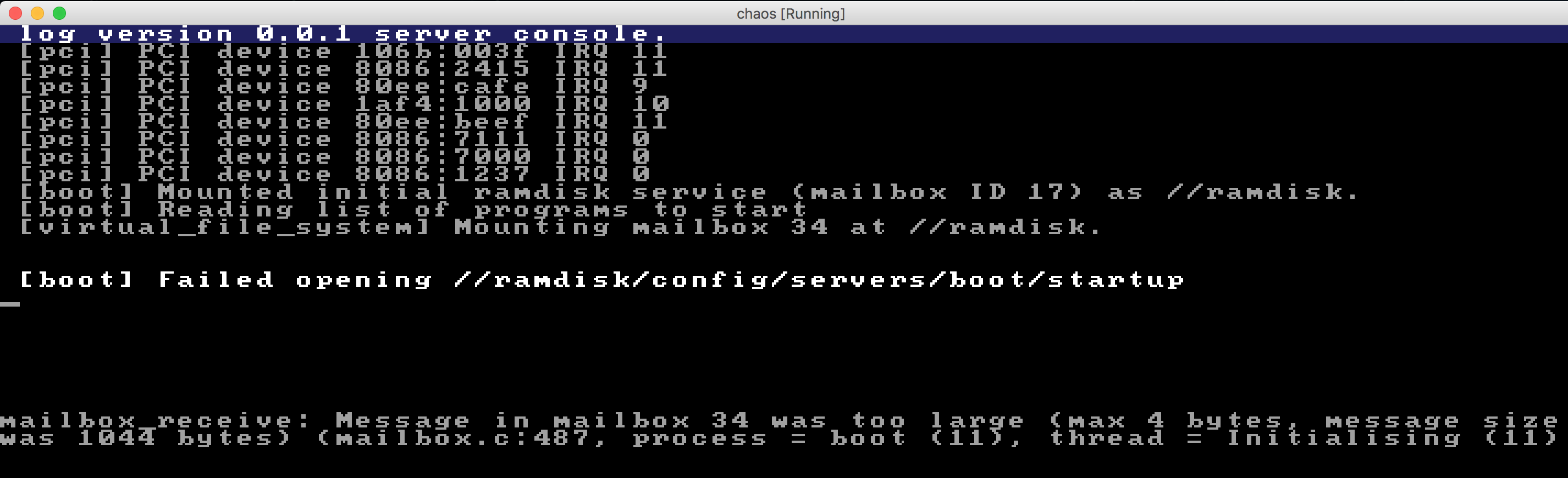

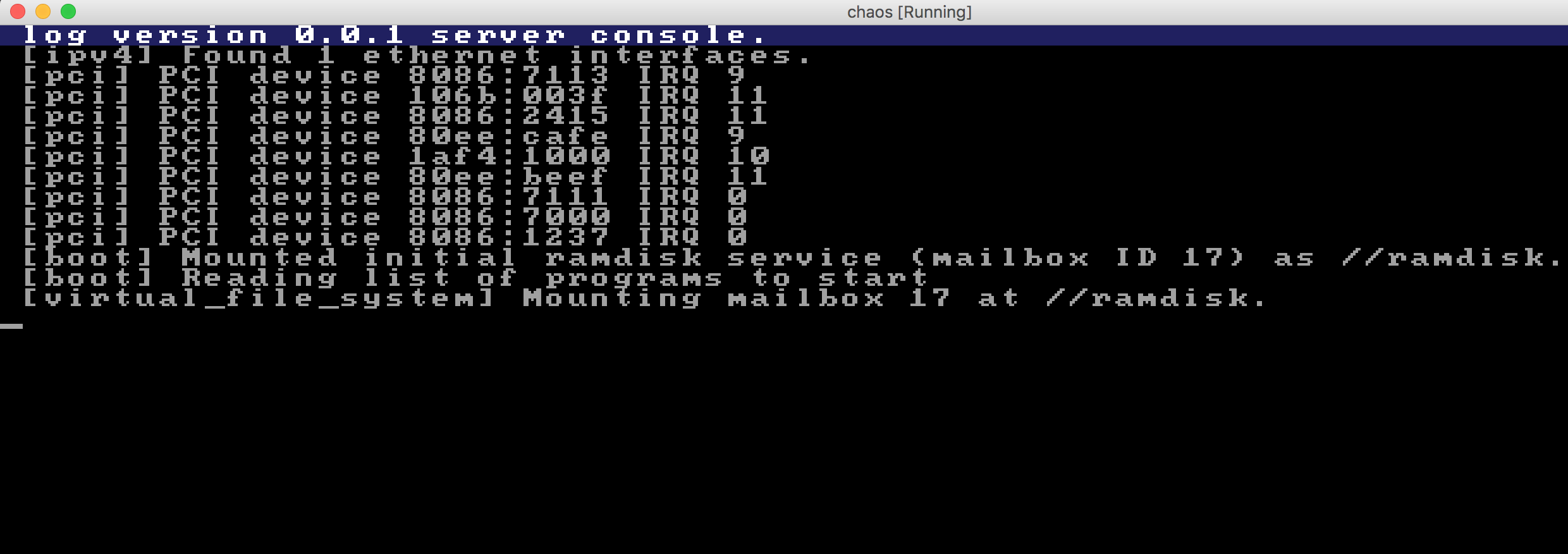

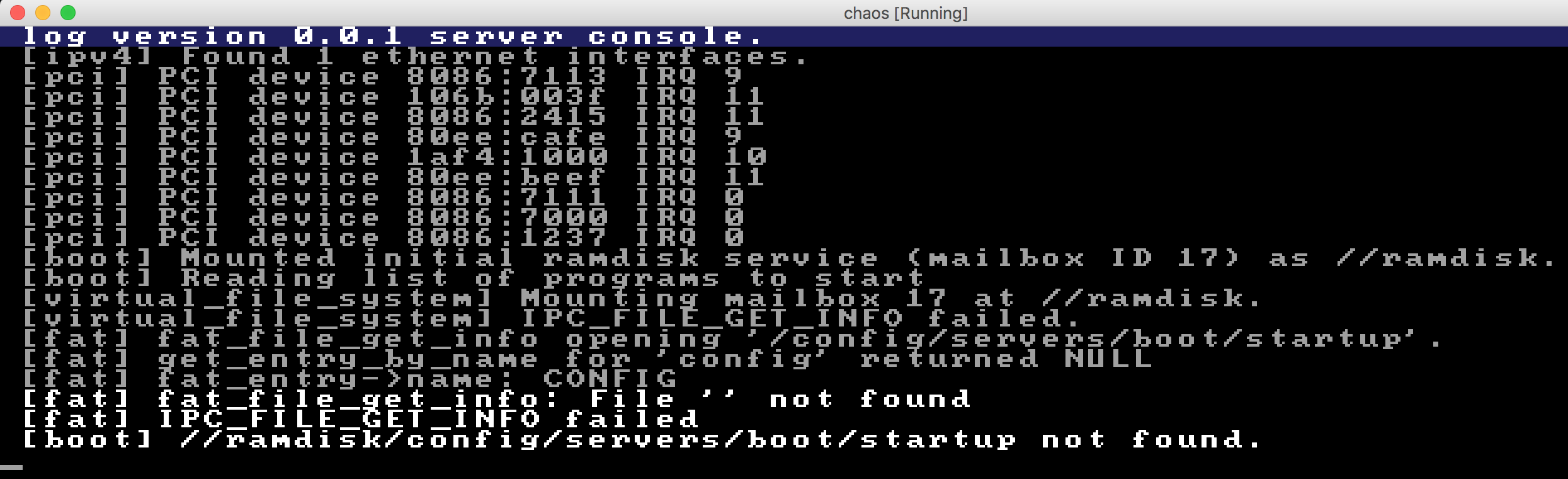

I decided to start by disabling some parts in the boot server to see where it's failing. Let's stop right before it reads the startup script and see the output when booting:

Alright, the error was now pin-pointed to this specific method:

static bool read_program_list(unsigned int *file_size)

{

file_verbose_directory_entry_type directory_entry;

log_print(&log_structure, LOG_URGENCY_DEBUG, "Reading list of programs to start");

string_copy(directory_entry.path_name, STARTUP_FILE);

if (file_get_info(&vfs_structure, &directory_entry) != FILE_RETURN_SUCCESS)

{

log_print(&log_structure, LOG_URGENCY_ERROR, STARTUP_FILE " not found.");

return FALSE;

}

memory_allocate((void **) &program_list_buffer, directory_entry.size);

file_handle_type handle;

file_open(&vfs_structure, STARTUP_FILE, FILE_MODE_READ, &handle);

file_read(&vfs_structure, handle, directory_entry.size, &program_list_buffer);

*file_size = directory_entry.size;

return TRUE;

}

One obvious error to the careful reader is the lack of error handling in the file_open call. If it will fail, the system will just carry on and pretend as if nothing has happened. That's bad, we should make it check the return value from file_open. The file_read method is also pretty bad at informing the reader why reading the file failed. Let's start by adding some more log output in file_read, to make it easier to debug issues like this. Again, simply applying the boy scout rule - small steps, making the universe a slightly better place with each step we take.

While adding some more log output to file_read, I saw that the problem now is that it tries to read from a non-existing file handle 0, so I'm quite sure that the root cause right now for the strange behavior is that the file_open call has started to fail, but it doesn't log anything there and the boot server just carries on happily, so that's why we get this. The error handling for the rest (parsing the file contents, starting the servers) is seemingly broken now after the refactoring, so instead of just logging that reading the file failed, it will try to do crazy things which will break badly. ![]() So I think that perhaps fixing the debug logging, and also implement proper error handling for the fail scenarios will make it at least stop right away when things go wrong...

So I think that perhaps fixing the debug logging, and also implement proper error handling for the fail scenarios will make it at least stop right away when things go wrong...

(I also found out places in the VFS server that didn't properly check return values, so I fixed these as well.)

Still wandering in the dark

I still wasn't quite sure what was happening; the added error handling and log output didn't give me that much yet. However, I was seeing now that this code:

file_handle_type handle;

if (file_open(&vfs_structure, STARTUP_FILE, FILE_MODE_READ, &handle) != FILE_RETURN_SUCCESS)

{

log_print(&log_structure, LOG_URGENCY_ERROR, "Failed opening " STARTUP_FILE);

return FALSE;

}

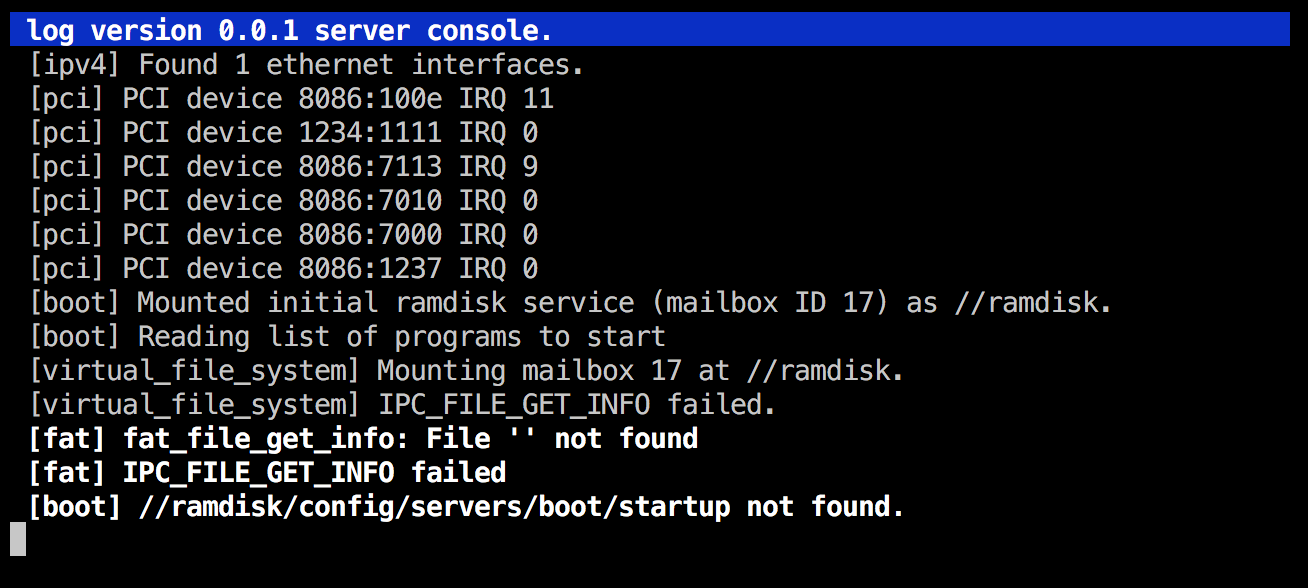

...would cause this output:

But why?

The file_open function is unfortunately again an example of a function with inadequate error handling:

return_type file_open(ipc_structure_type *vfs_structure, char *file_name, file_mode_type mode,

file_handle_type *handle)

{

message_parameter_type message_parameter;

file_open_type open;

string_copy_max(open.file_name, file_name, MAX_PATH_NAME_LENGTH);

open.mode = mode;

message_parameter.protocol = IPC_PROTOCOL_FILE;

message_parameter.message_class = IPC_FILE_OPEN;

message_parameter.data = &open;

message_parameter.block = TRUE;

message_parameter.length = sizeof(file_open_type);

system_call_mailbox_send(vfs_structure->output_mailbox_id, &message_parameter);

message_parameter.data = handle;

message_parameter.length = sizeof(file_handle_type);

system_call_mailbox_receive(vfs_structure->input_mailbox_id, &message_parameter);

return FILE_RETURN_SUCCESS;

}

Let's fix that. Both system_call_mailbox_send and system_call_mailbox_receive have a slight chance of failing, so we need to take that into account here.

We're now getting this. At least a different output. ![]()

Maybe it's time to bring out gdb again. The downside of it is that we might get spurious breaks in other processes than the boot server, but it might be worth it anyway.

gdb to the rescue

gdb hinted me that the system_call_mailbox_send was actually failing, which is incredibly weird if that's really the case. The annoying thing with debugging this in gdb is that part of the address space is taken care of by servers/system/boot/boot, and other parts by the storm kernel binary, so we can't point gdb to a single executable to be able to place breakpoints on specific function names, see the values of variables etc. Then we also have the whole multi-process and multi-thread architecture making it harder; the whole debug experience is simply very much sub-par at the moment. So maybe I'll just dig into the mailbox_send source code in the kernel for now...

Hmm, took a quick look there but couldn't find any obvious explanation to this. All the places where it can fail should print a debug message in that case, so it shouldn't really be the mailbox sending that is failing.

(This was again a very late night session, when I should have been going to be instead of sitting up debugging, but... I just... want to... fix that bug...

)

I enabled VERBOSE_DEBUG in mailbox.c, but just like the comment there stated, it makes the system unusable since the kernel log gets flooded with output...

Then I had an idea. Seems obvious, but: the mailbox_receive complains that it cannot receive the message because it's too large. What I use the gdb approach again and put a conditional breakpoint in mailbox_send, like this: break mailbox_send if mailbox_id == 34, couldn't that help?

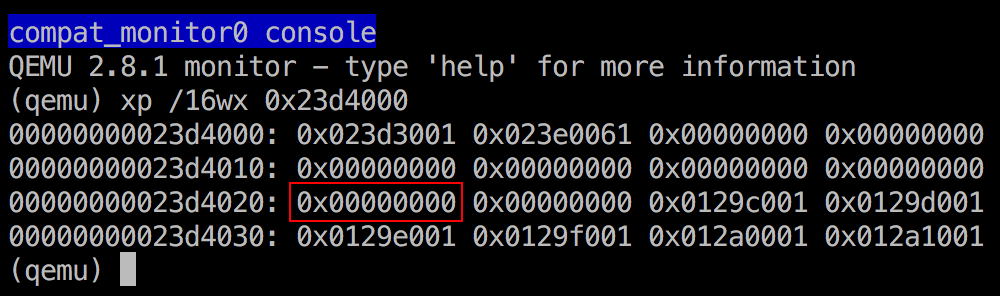

By the way - mailbox ID 34, that seems incredibly weird. I mean, look at this screen shot:

The way it seems to be like is this:

- The VFS server is attempting to mount the initial ramdisk using mailbox 34 as the

//ramdiskvolume. - Then the boot server tries to open a file, this time reading from mailbox 34 (the

mailbox_receivelog output being printed.) - That doesn't compute. It seems like the boot server is telling VFS server to send the actual block service traffic to mailbox 34, i.e. our own connection to the VFS, which would cause exactly these spurious packages to appear in the mailbox. The

bootserver receives the traffic intended for the initial ramdisk server!

It's not a bug, it's a "feature"!

I think it actually boils down to the currently broken/unimplemented functionality now. I ran git blame on servers/file_system/virtual_file_system/virtual_file_system.c. git blame is a great tool when you want to discover how and when you have failed. ![]() Unfortunately, it didn't help since the code has looked like this all the way since 2007. I can't understand how this have ever worked...

Unfortunately, it didn't help since the code has looked like this all the way since 2007. I can't understand how this have ever worked...

The next step will be to fix the virtual_file_system service to not use the incoming ipc_structure for registering the mount point. Instead, it should use the incoming mailbox_id and based on that, create an IPC connection. So I think we are now down to the root cause - the VFS mounting failing. The thing that probably confused me a bit was the fact that it behaved somewhat differently (mailbox_receive printing log messages.) But let's do something hard - let's try to ignore that fact for now and instead resolve the problem.

The fix for this wasn't actually so complicated. Instead of the vfs_mount function looking like this:

static bool vfs_mount(file_mount_type *mount, ipc_structure_type *ipc_structure)

{

memory_copy(&mount_point[mounted_volumes].ipc_structure, ipc_structure, sizeof(ipc_structure_type));

mount_point[mounted_volumes].handled_by_vfs = FALSE;

string_copy_max(mount_point[mounted_volumes].location, mount->location, MAX_PATH_NAME_LENGTH);

mounted_volumes++;

log_print_formatted(&log_structure, LOG_URGENCY_INFORMATIVE,

"Mounting mailbox %u at //%s.",

ipc_structure->output_mailbox_id, mount->location);

return TRUE;

}

...it should look like this. The ipc_service_connection_request call is the key difference.

static bool vfs_mount(file_mount_type *mount)

{

mount_point[mounted_volumes].ipc_structure.output_mailbox_id = mount->mailbox_id;

if (ipc_service_connection_request(&mount_point[mounted_volumes].ipc_structure) != IPC_RETURN_SUCCESS)

{

log_print_formatted(&log_structure, LOG_URGENCY_ERROR,

"vfs_mount: Failed connecting to service with mailbox %u", mount->mailbox_id);

return FALSE;

}

mount_point[mounted_volumes].handled_by_vfs = FALSE;

string_copy_max(mount_point[mounted_volumes].location, mount->location, MAX_PATH_NAME_LENGTH);

mounted_volumes++;

log_print_formatted(&log_structure, LOG_URGENCY_INFORMATIVE,

"Mounting mailbox %u at //%s.",

mount->mailbox_id, mount->location);

return TRUE;

}

Some other trivial errors in other servers surfaced now as well, which were easily fixable.

All errors gone, but still not working

But now, something odd happened. I didn't receive any errors any more, but it still didn't continue the booting...

I don't know about you, but to me, problems like this are some of the more annoying ones. It doesn't work, but I don't know why and no errors whatsoever are printed.

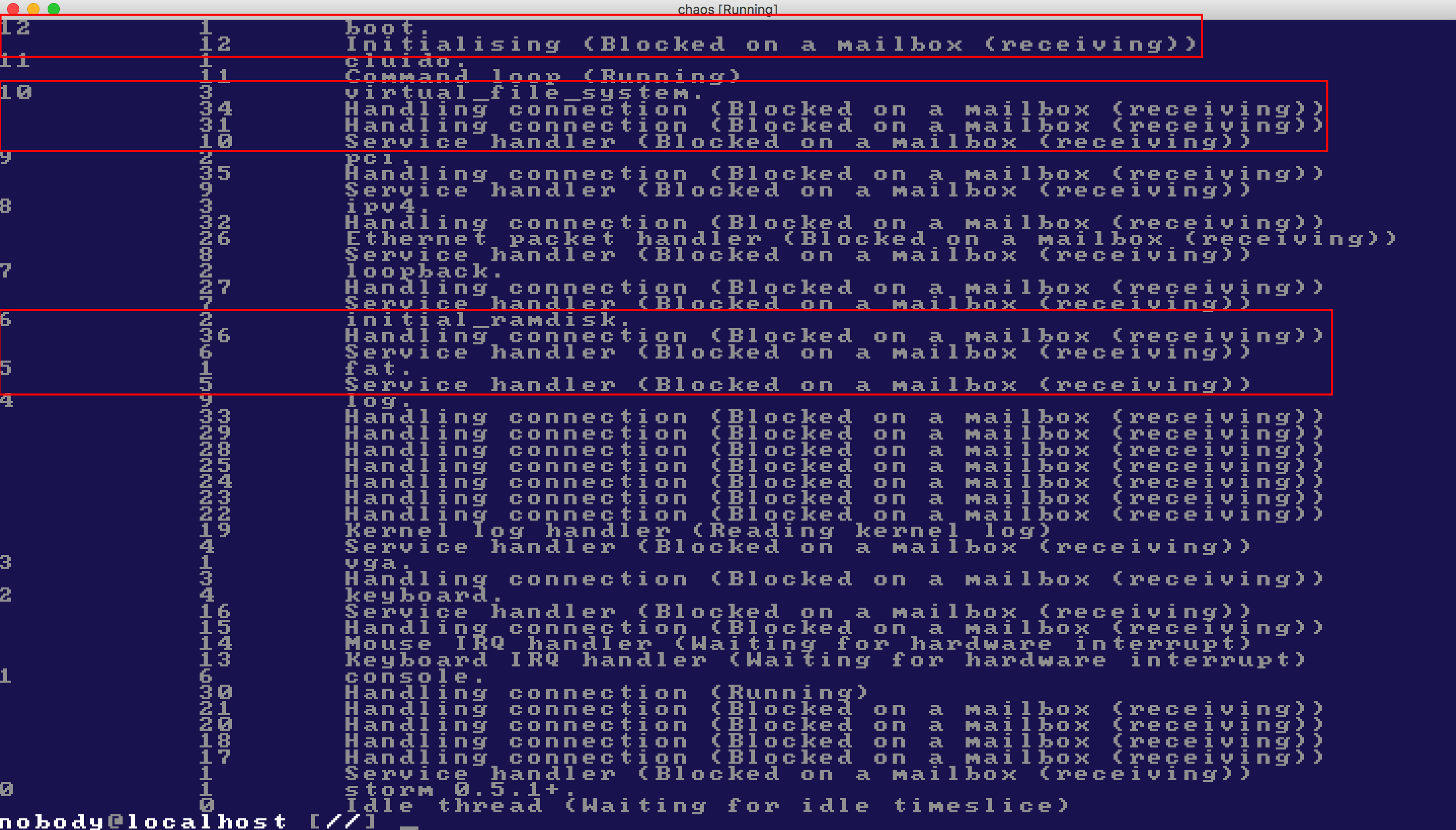

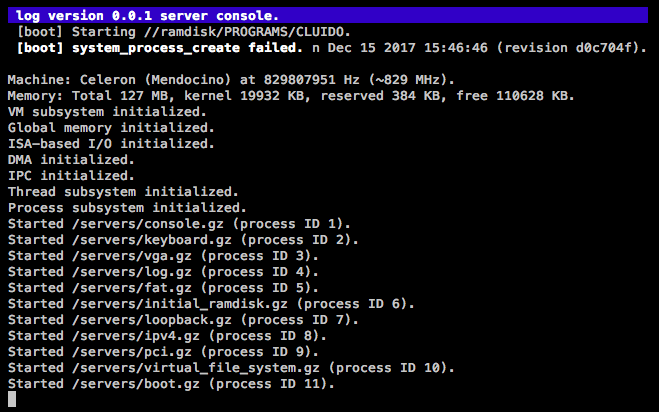

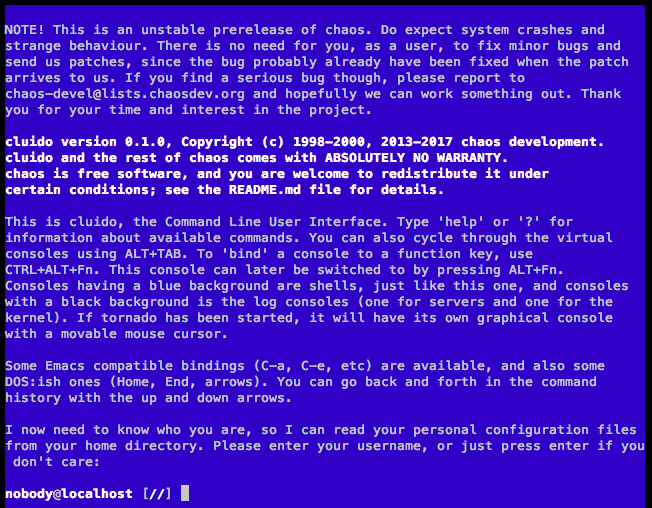

I thought at this point that enabling cluido on startup would perhaps be a good idea; it has a command which lets you see all threads of all processes and what they are blocked on. Good for debugging!

Here is what it looked like, with some highlightings of mine to make it clearer to the reader:

So the conclusion right now (these are in red):

- The

bootserver is waiting on a mailbox - not that surprising; that's pretty much what I'd have expected. - The

virtual_file_systemserver is waiting on two mailboxes, also receiving. That's a bit strange, could it be that it has tried to contact another server but stalled in doing so? (Side note: we should implement a timeout inmailbox_receive, for cases where you don't want a server to stall indefinitely just because it never receives an expected answer...) - The

initial_ramdiskis handling a connection, and has a service handler. Looks good. - The

fatserver has a service handler only, and this is probably the real problem here.

Realizing the serious flaw in my algorithm

During the day, when being mostly away from th ecomputer, I began thinking what the problem could be: was there was a fundamental flaw in the new vfs_mount algorithm? I then looked at the code, which confirmed my suspicion: The newly added code made it connect to the provided block service, using the standard IPC mechanisms built-in to the system. So it looked like this:

+---------------------+ +-----------------+

| virtual_file_system | --> | initial_ramdisk |

+---------------------+ +-----------------+

Do you see the obvious issue here? One significant layer of functionality is missing, namely, the server responsible for actually parsing the filesystem. A proper IPC structure would look like this, in this specific case (since the initial ramdisk is formatted with a FAT filesystem):

+---------------------+ +-----+ +-----------------+

| virtual_file_system | --> | fat | --> | initial_ramdisk |

+---------------------+ +-----+ +-----------------+

In other words, the VFS server must tell the fat server to mount the initial_ramdisk, and the VFS server will then only communicate with the fat server to get things done. That's the reason why things are not working now; the VFS server is sending its IPC commands to the wrong server, a server not being able to interpret the file_system protocol, so that's why it never gets any meaningful replies... ![]()

So, what I needed to do was to tweak the vfs_mount method lookup whatever file_system services was available and use it to mount the block device, instead of going straight to the initial_ramdisk.

Here is the new version of the function:

static bool vfs_mount(file_mount_type *mount)

{

// FIXME: We only support one file_system implementation for now. A proper implementation would gather a more comprehensive

// list of file system services, attempt to mount the volume with them all and see which one is successful.

mailbox_id_type mailbox_id[1];

unsigned int services = 1;

if (ipc_service_resolve("file_system", mailbox_id, &services, 5, &empty_tag) != IPC_RETURN_SUCCESS)

{

log_print_formatted(&log_structure, LOG_URGENCY_ERROR, "vfs_mount: Failed to resolve file_system service");

return FALSE;

}

mount_point[mounted_volumes].ipc_structure.output_mailbox_id = mailbox_id[0];

if (ipc_service_connection_request(&mount_point[mounted_volumes].ipc_structure) != IPC_RETURN_SUCCESS)

{

log_print_formatted(&log_structure, LOG_URGENCY_ERROR,

"vfs_mount: Failed to establish connection with file system service with mailbox ID %u",

mailbox_id[0]);

return FALSE;

}

message_parameter_type message_parameter;

message_parameter.protocol = IPC_PROTOCOL_FILE;

message_parameter.message_class = IPC_FILE_MOUNT_VOLUME;

message_parameter.length = sizeof(mailbox_id_type);

message_parameter.data = &mount->mailbox_id;

if (ipc_send(mount_point[mounted_volumes].ipc_structure.output_mailbox_id, &message_parameter) != IPC_RETURN_SUCCESS)

{

log_print_formatted(&log_structure, LOG_URGENCY_ERROR,

"vfs_mount: Failed to send IPC message to mailbox ID %u",

mailbox_id[0]);

return FALSE;

}

mount_point[mounted_volumes].handled_by_vfs = FALSE;

string_copy_max(mount_point[mounted_volumes].location, mount->location, MAX_PATH_NAME_LENGTH);

mounted_volumes++;

log_print_formatted(&log_structure, LOG_URGENCY_INFORMATIVE,

"Mounting mailbox %u at //%s.",

mount->mailbox_id, mount->location);

return TRUE;

}

Slightly more complex than before, and even worse; it didn't even work. Here's the error I got on startup:

Sigh. As C3PO would have it, will this never end? When I thought I had the resolution in place, new errors are coming up... ![]()

Digging into the fat server

The part of the fat server that is printing this message looks like this:

case IPC_FILE_GET_INFO:

{

if (mounted)

{

file_verbose_directory_entry_type *directory_entry = (file_verbose_directory_entry_type *) data;

if (!fat_file_get_info(&fat_info, directory_entry))

{

return_type return_value = FILE_RETURN_FILE_ABSENT;

log_print_formatted(&log_structure, LOG_URGENCY_ERROR, "IPC_FILE_GET_INFO failed");

message_parameter.message_class = IPC_FILE_RETURN_VALUE;

message_parameter.data = &return_value;

message_parameter.length = sizeof(return_type);

}

ipc_send(ipc_structure.output_mailbox_id, &message_parameter);

}

break;

}

So, fat_file_get_info is failing for some reason... I wonder why. To learn more about why things were failing, I added some more logging in the fat server. This was the output now:

I think this was the time to bring out gdb again... Here's the (somewhat abbreviated) session:

vagrant@debian-9rc1-i386:/vagrant$ gdb servers/file_system/fat/fat

GNU gdb (Debian 7.12-6) 7.12.0.20161007-git

[...]

Reading symbols from servers/file_system/fat/fat...done.

(gdb) break fat_file_get_info

Breakpoint 1 at 0x40001ab0: file fat_file_get_info.c, line 12.

(gdb) cont

Continuing.

Breakpoint 1, fat_file_get_info (fat_info=0xfc000f14, file_info=0x7d7000) at fat_file_get_info.c:12

12 {

(gdb) next

13 unsigned int elements = MAX_PATH_ELEMENTS;

(gdb)

18 log_print_formatted(&log_structure, LOG_URGENCY_DEBUG, "fat_file_get_info opening '%s'.", file_info->path_name);

(gdb)

22 path_split(file_info->path_name, path, &elements);

(gdb) file_info->path_name

Undefined command: "file_info->path_name". Try "help".

(gdb) print file_info->path_name

$1 = "/config/servers/boot/startup\000\000startup", '\000' <repeats 771 times>...

(gdb) next

25 if (elements < 1)

(gdb) print file_info->path_name

$2 = "\000config\000servers\000boot\000startup\000\000startup", '\000' <repeats 771 times>...

(gdb) print elements

$3 = 4

Hmm, that's interesting. The file_info->path_name seems to contain some extra garbage at the end. That should be fine though, since it's a null-terminated string and it will just happen to contain whatever happened to be at that memory (probably a stack-based variable) at the time it was created.

Let's continue a few source lines:

(gdb) next

34 if (!fat_directory_read(fat_info, path, elements - 1, &fat_entry))

(gdb)

42 our_file = get_entry_by_name(fat_entry, path[elements - 1]);

(gdb) print fat_entry

$1 = (fat_entry_type *) 0x400094c0 <directory_buffer>

(gdb) print *fat_entry

$2 = {name = "CONFIG\000 ", extension = "\000 ", read_only = 0 '\000', hidden = 0 '\000', system = 0 '\000', volume_id = 0 '\000',

directory = 1 '\001', archive = 0 '\000', reserved = 0 '\000', nt_reserved = 8 '\b', creation_hundreds = 0 '\000', creation_time = {

hours = 0, minutes = 50, seconds = 16}, creation_date = {year = 72, month = 6, day = 9}, access_date = {year = 72, month = 6,

day = 9}, first_cluster_number_high = 0, update_time = {hours = 0, minutes = 50, seconds = 16}, update_date = {year = 72, month = 6,

day = 9}, first_cluster_number_low = 2, file_size = 0, next = 0x400094e0 <directory_buffer+32> ""}

(gdb) next

45 if (our_file == NULL)

(gdb) print our_file

$3 = (fat_entry_type *) 0x0

(gdb) print path[elements - 1]

$4 = 0x7d7015 "startup"

Hmm... *scratches head*...

config is the name of the root-level folder. In fact, this is what it looks like, the whole folder structure of the ramdisk (courtesy of mtools):

Volume in drive U has no label

Volume Serial Number is F50C-F520

Directory for U:/

config <DIR> 2017-10-08 16:50

1 file 0 bytes

Directory for U:/config

. <DIR> 2017-10-08 16:50

.. <DIR> 2017-10-08 16:50

servers <DIR> 2017-10-08 16:50

3 files 0 bytes

Directory for U:/config/servers

. <DIR> 2017-10-08 16:50

.. <DIR> 2017-10-08 16:50

boot <DIR> 2017-10-08 16:50

3 files 0 bytes

Directory for U:/config/servers/boot

. <DIR> 2017-10-08 16:50

.. <DIR> 2017-10-08 16:50

startup 16 2017-10-08 16:50

3 files 16 bytes

Total files listed:

10 files 16 bytes

16 717 824 bytes free

By looking at the get_entry_by_name source code, I see that the fat_entry seems to be an indexable array. Let's dig a bit further into and see what other entries we have there (if any.)

(gdb) print fat_entry[1]

$5 = {name = "\000\000\000\000\000\000\000", extension = "\000\000", read_only = 0 '\000', hidden = 0 '\000', system = 0 '\000',

volume_id = 0 '\000', directory = 0 '\000', archive = 0 '\000', reserved = 0 '\000', nt_reserved = 0 '\000',

creation_hundreds = 0 '\000', creation_time = {hours = 0, minutes = 0, seconds = 0}, creation_date = {year = 0, month = 0, day = 0},

access_date = {year = 0, month = 0, day = 0}, first_cluster_number_high = 0, update_time = {hours = 0, minutes = 0, seconds = 0},

update_date = {year = 0, month = 0, day = 0}, first_cluster_number_low = 0, file_size = 0, next = 0x40009500 <directory_buffer+64> ""}

Okay, a null-terminated string. So that means the end of the file allocation table for this folder. But why did it give us the root folder instead of the proper subfolder?

I think we need to go back one step, to the fat_directory_read function. But first, let's see what the fat_info looks like, just for reference:

(gdb) print *fat_info

$7 = {sectors_per_cluster = 4, bytes_per_sector = 512, first_data_sector = 100, root_directory_sectors = 32, bytes_per_cluster = 2048,

block_structure = {input_mailbox_id = 46, output_mailbox_id = 47}, fat = 0x40005340 <global_fat>, root = 0x4000d8c0 <global_root>,

bits = 16}

I noted that the fat_directory_read had some useful debug logging there in the code which was commented out, so I uncommented that and re-booted the system.

Here is what it looked like at this point:

A potential issue: lower-case vs upper-case

The careful reader will perhaps start thinking like me when seeing these messages: could the difference between upper-case and lower-case config and upper-case CONFIG be a part of the problem here? Good question!

The thing is that the LFN (long file name) support for FAT file systems was not originally there, it was a hack that Microsoft added at the time of Windows 95, to be able to compete with other systems (*cough* OS/2

) which already had long file systems, and a much superior system architecture in general. But just like we know, the technically superior doesn't always win and this was sadly the case here also. Win95 "won" the consumer operating system war over OS/2, and so we ended up using a 32-bit graphical frontend on top of a 16-bit operating system etc... you know what I mean.

Anyway, suffice it to say that long file names aren't natively supported on FAT. And, case in file names is handled a bit specially, if I remember correctly: the MS-DOS (short) names are always uppercase. So what I am suspecting here is that mtools isn't really generating any (lower-case) LFN entries for us in this case => only the CONFIG folder do exist, and our string comparisons are probably case sensitive (which they should probably not be in the case of FAT, since it's a case-preserving but case-insensitive file system by design.)

This is easily tested: let's just change the path of the startup script in the boot server:

#define STARTUP_FILE "//ramdisk/CONFIG/SERVERS/BOOT/STARTUP"

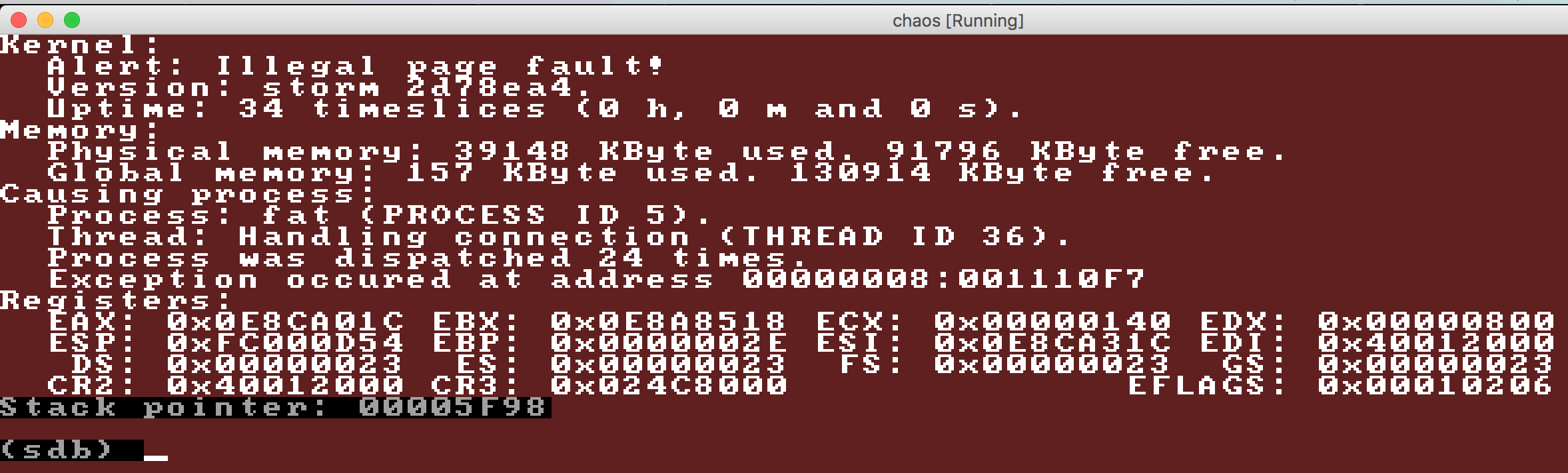

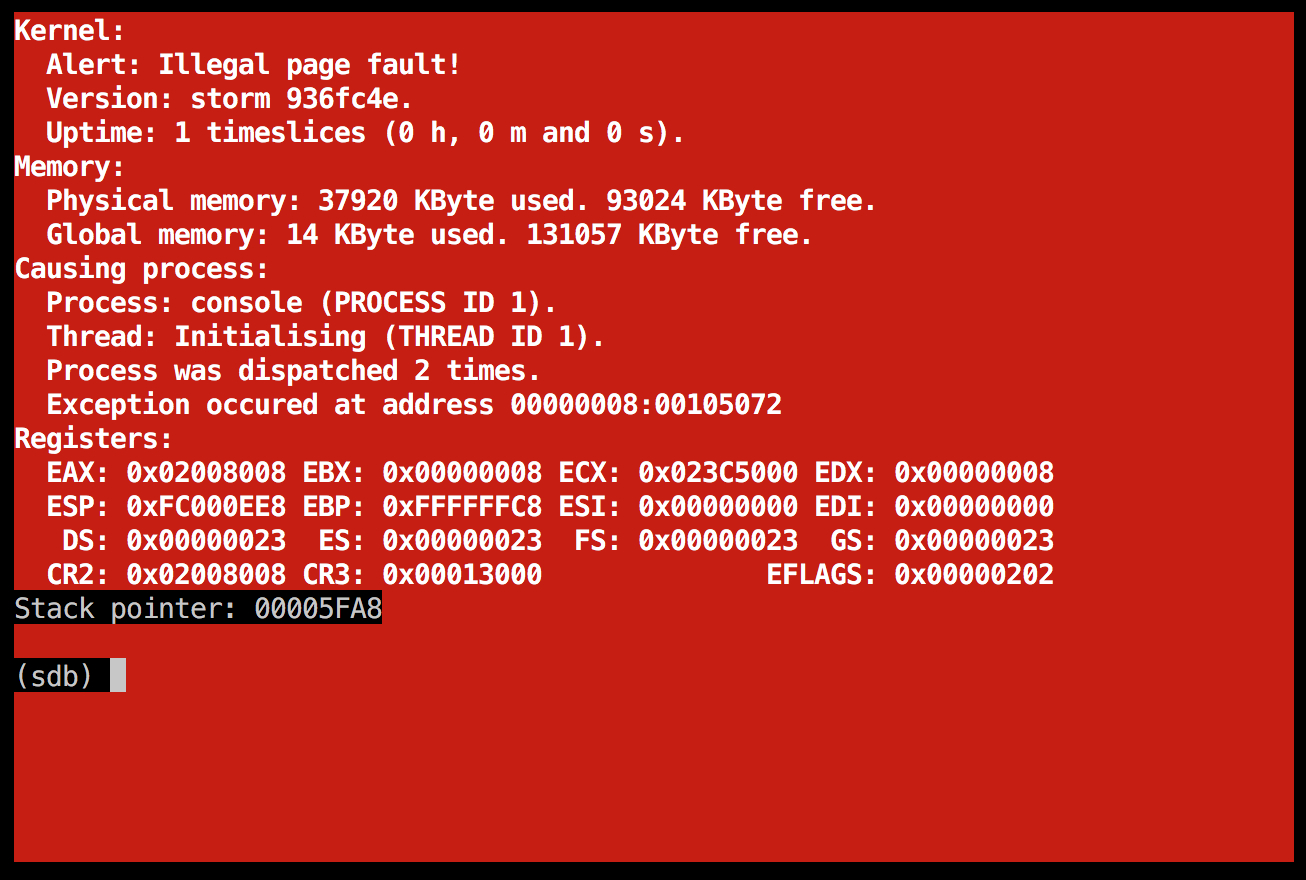

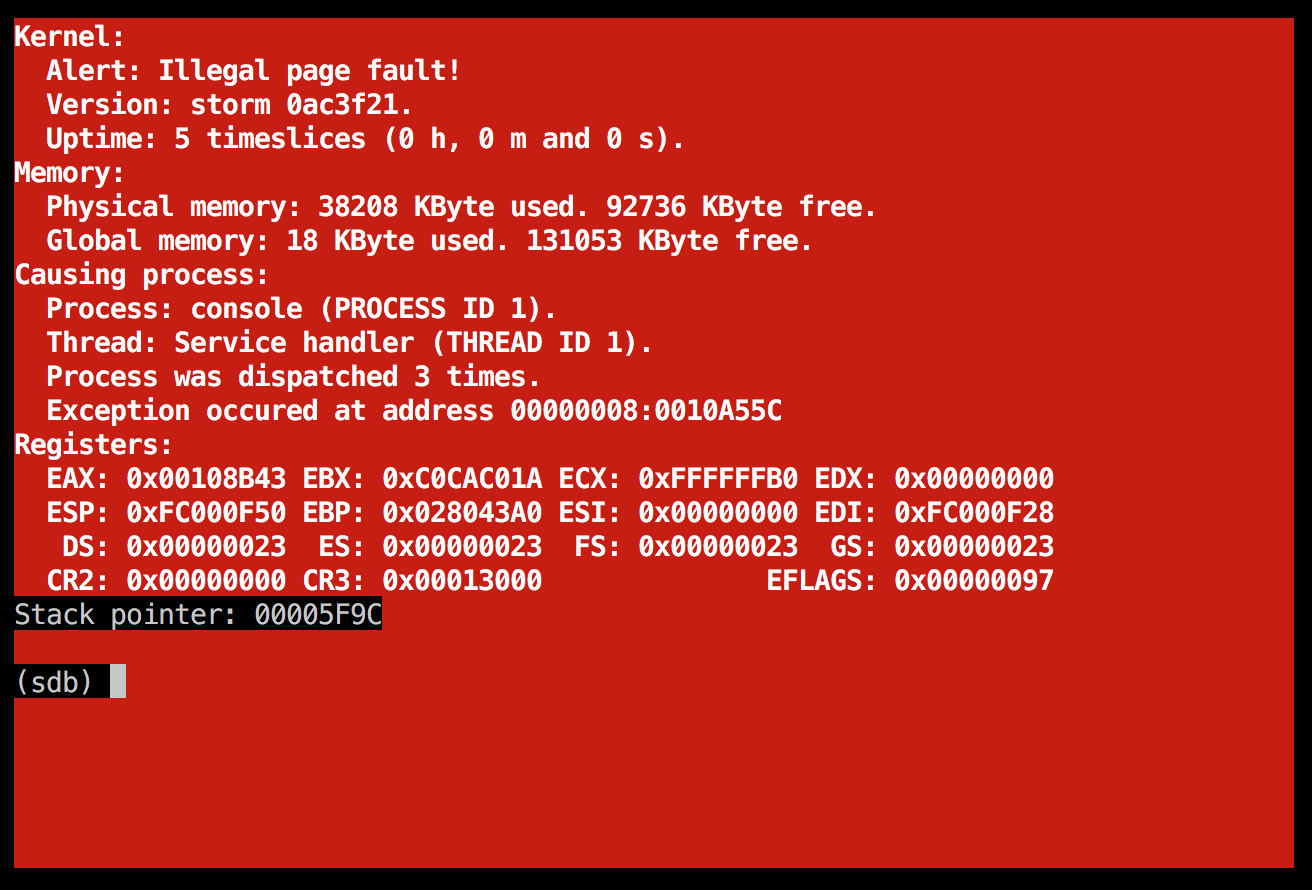

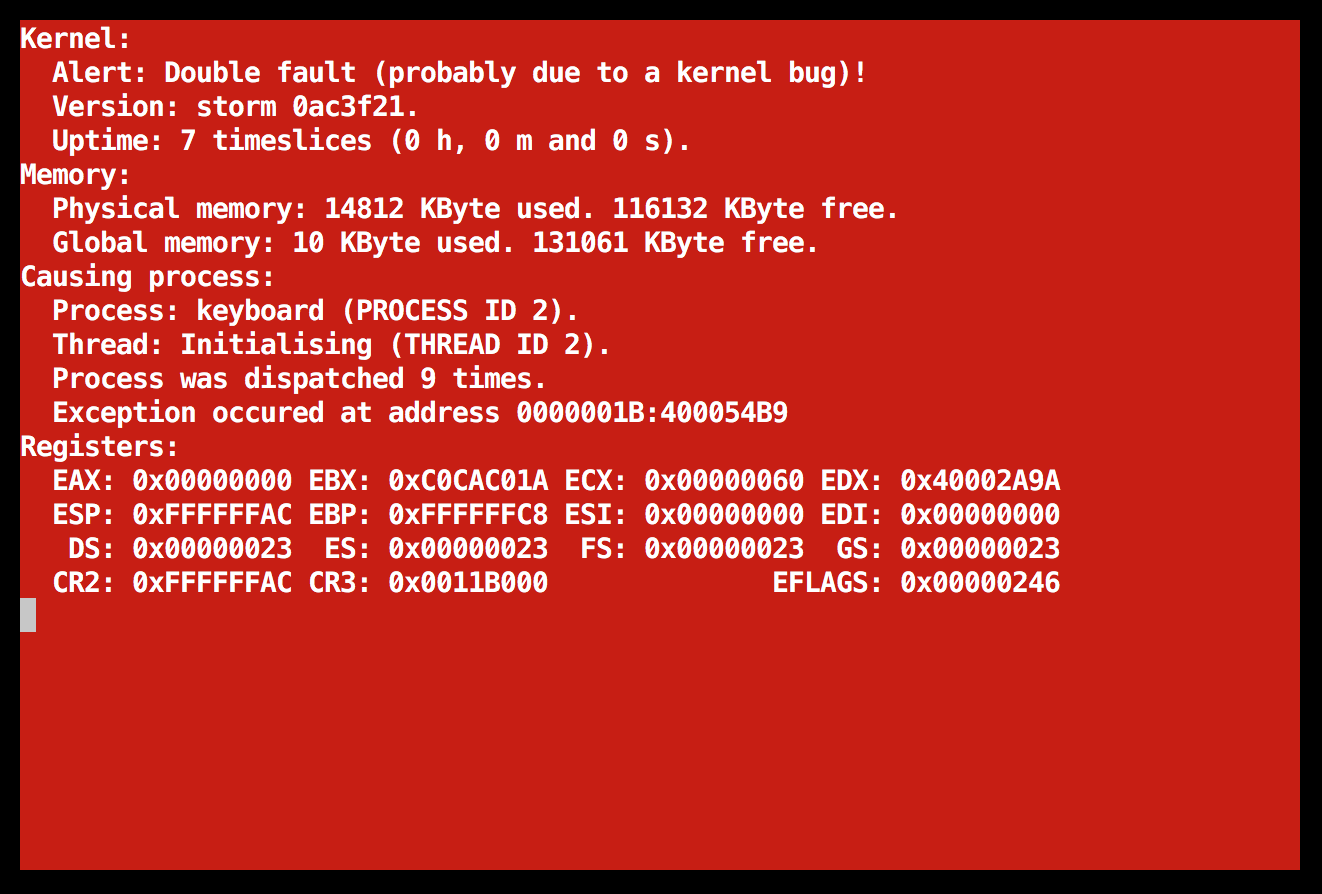

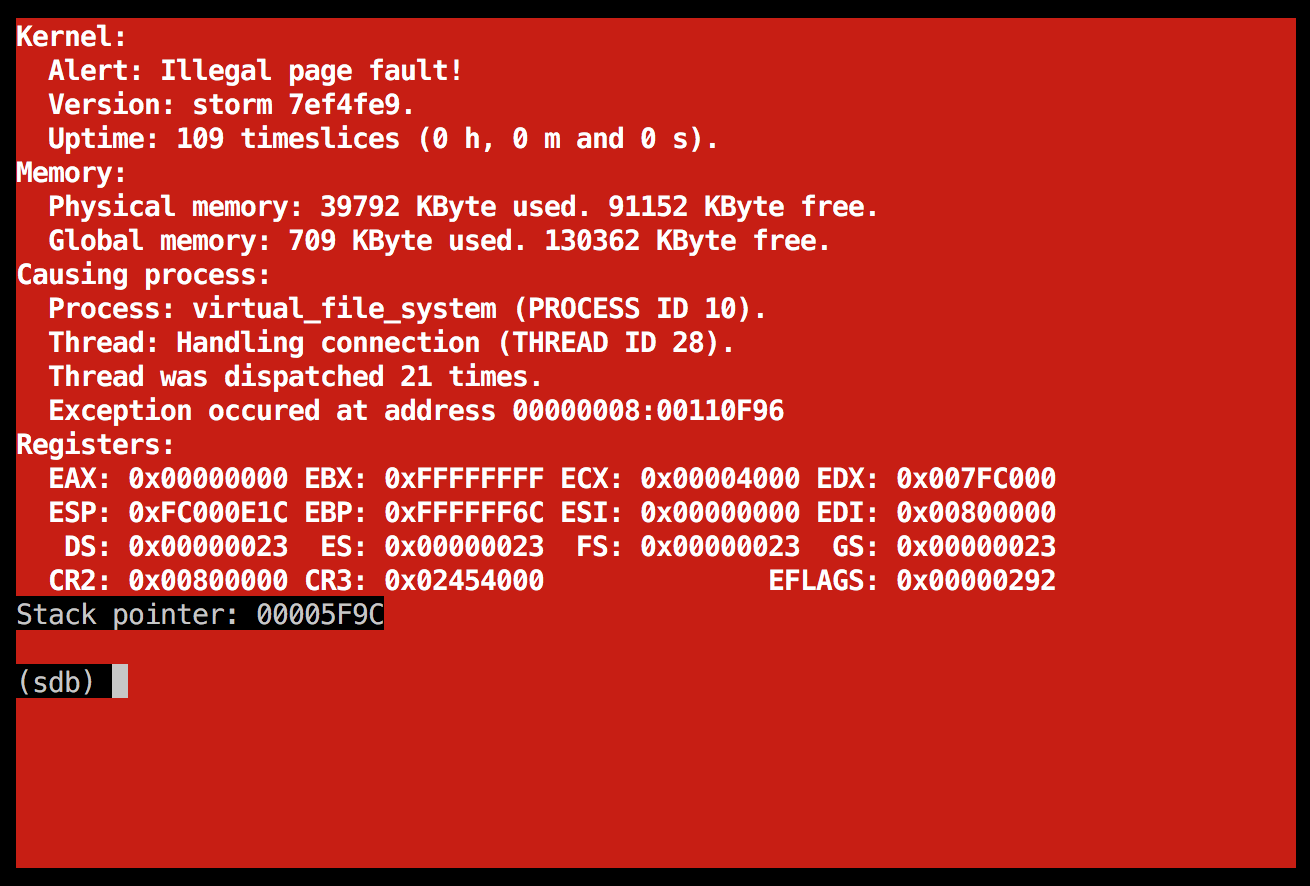

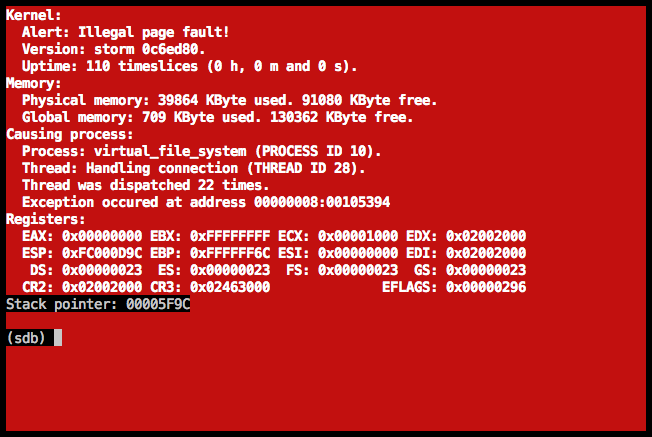

I recompiled and rebooted (again...), just to see this:

Those are the times when you pretty much feel like throwing the machine out the window, but let's not do that yet. :godmode: Well, at least it behaves differently, that's for sure!

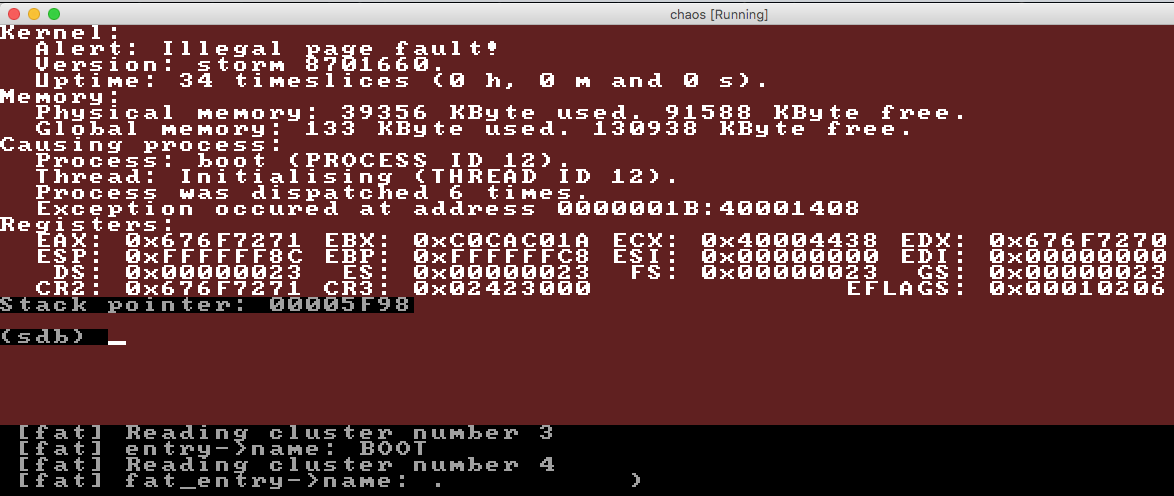

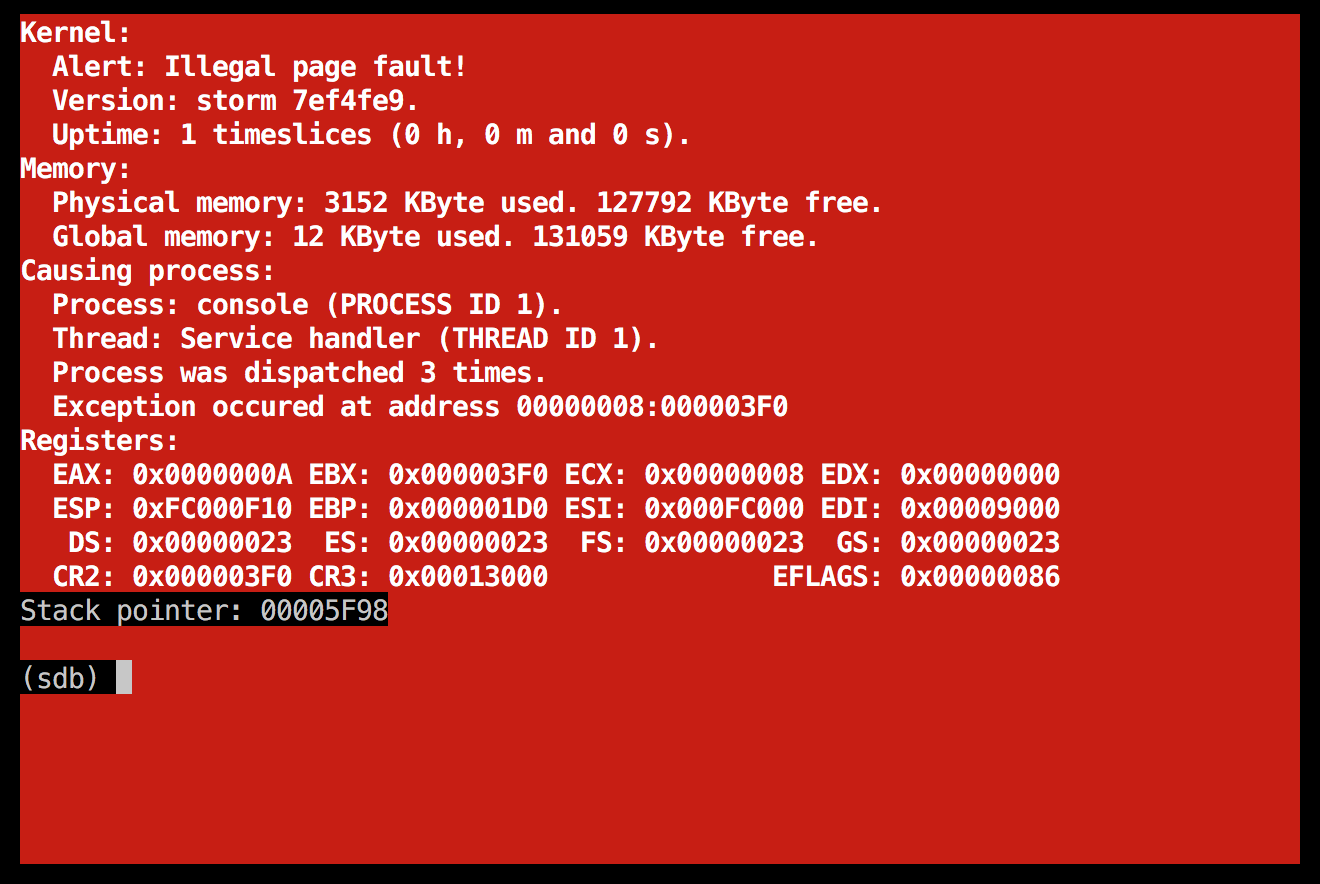

Illegal page fault in the fat process

The EIP address in this case is a kernel-address; that's easily spotted since the kernel runs at 0x100000 and upwards, where our normal user-level processes gets loaded at 0x40000000 and higher. I used my little friend objdump -S once more, and saw that mailbox_receive was at fault this time. More specifically, these lines:

memory_copy(message_parameter->data, mailbox->first_message->data, mailbox->first_message->length);

1110ee: 83 c0 1c add $0x1c,%eax

1110f1: c1 f9 02 sar $0x2,%ecx

1110f4: 89 c6 mov %eax,%esi

1110f6: fc cld

1110f7: f3 a5 rep movsl %ds:(%esi),%es:(%edi) <--- This instruction causes the error.

1110f9: f6 c2 02 test $0x2,%dl

1110fc: 74 02 je 111100 <mailbox_receive+0x2e0>

1110fe: 66 a5 movsw %ds:(%esi),%es:(%edi)

111100: f6 c2 01 test $0x1,%dl

111103: 74 01 je 111106 <mailbox_receive+0x2e6>

111105: a4 movsb %ds:(%esi),%es:(%edi)

So, what do we do now? I could place a gdb breakpoint in trap_page_fault... and see the parameters to the mailbox_receive call by going up a few levels in call stack. Said and done:

vagrant@debian-9rc1-i386:/vagrant$ gdb storm/x86/storm

GNU gdb (Debian 7.12-6) 7.12.0.20161007-git

[...]

warning: No executable has been specified and target does not support

determining executable automatically. Try using the "file" command.

0x0000877d in ?? ()

Reading symbols from storm/x86/storm...done.

(gdb) break trap.c:237

Breakpoint 1 at 0x10909b: file trap.c, line 237.

(gdb) cont

Continuing.

Breakpoint 1, trap_page_fault () at trap.c:237

237 debug_crash_screen("Illegal page fault", current_tss);

(gdb) bt

#0 trap_page_fault () at trap.c:237

#1 0x00000002 in ?? ()

Hmm, not so helpful. This is of course since the trap_page_fault function isn't really called using normal calling conventions, but rather being called as as a "trap" handler, and trap & IRQ handlers don't follow exactly the normal calling conventions => gdb doesn't manage to automatically help us with printing a proper stack trace in this case.

How about printing the current_tss object, does it give us any helpful clues?

(gdb) print *current_tss

$2 = {previous_task_link = 0, u0 = 0, esp0 = 4227862528, ss0 = 16, u1 = 0, esp1 = 0, ss1 = 0, u2 = 0, esp2 = 0, ss2 = 0, u3 = 0,

cr3 = 38555648, eip = 1118455, eflags = 518, eax = 244088860, ecx = 320, edx = 2048, ebx = 243927256, esp = 4227861844, ebp = 46,

esi = 244089628, edi = 1073815552, es = 35, u4 = 0, cs = 8, u5 = 0, ss = 16, u6 = 0, ds = 35, u7 = 0, fs = 35, uint8_t = 0, gs = 35,

u9 = 0, ldt_selector = 0, u10 = 0, t = 0, u11 = 0, iomap_base = 352, process_type = 1, process_id = 5, cluster_id = 0, thread_id = 36,

parent_tss = 0xa000, user_id = 0, priority_process = 0, priority_cluster = 0, priority_thread = 0, stack_pages = 1,

allocated_pages = 20, mutex_kernel = 0x0, mutex_user_id = 0, mutex_time = 34, mailbox_id = 46, state = 0, timeslices = 24,

thread_name = "Handling connection", '\000' <repeats 108 times>, code_base = 4842, data_base = 4846, code_pages = 4, data_pages = 13,

virtual_code_base = 1073745920, virtual_data_base = 1073762304, iomap_size = 0, capability = {modify_services = 0,

modify_hardware = 0, thread_control_others = 0, kill_other_threads = 0}, initialised = 1, instruction_pointer = 0,

process_info = 0xe899198, iomap = 0xe8a7b78 ""}

Nothing obvious comes to mind. It was again midnight, so I had to leave it for now and continue some day later.

Analyzing the page fault further

process_id = 5 it says there, which should be the fat server, which is in turn also confirmed by the screenshot above. So, the fat server tries to receive data from a mailbox, but in doing so it exceeds the boundaries of mapped memory.

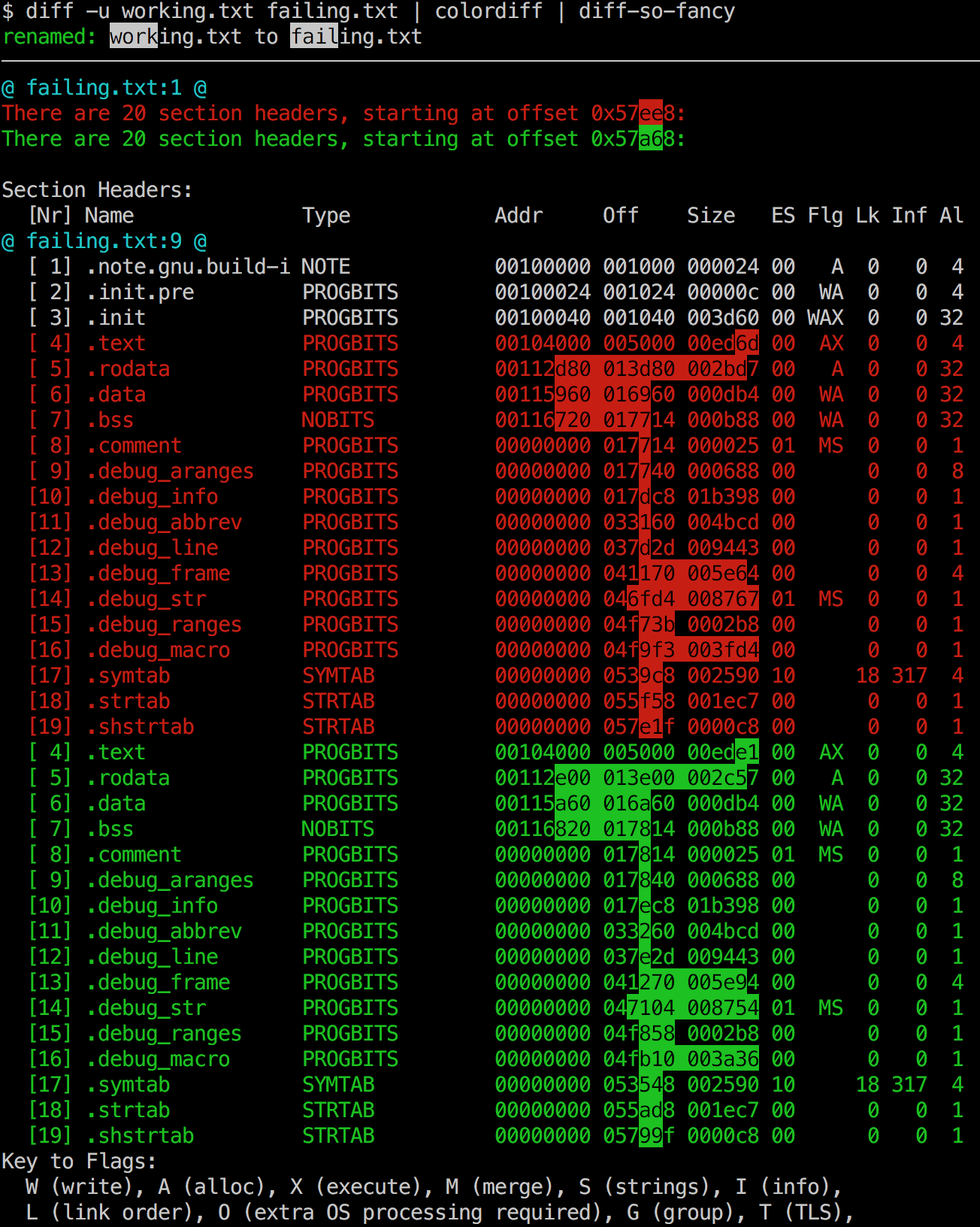

Let's read the ELF sections from the fat server and see what they look like. Again, readelf turns out to be an invaluable debugging tool:

vagrant@debian-9rc1-i386:/vagrant$ readelf -S servers/file_system/fat/fat

There are 18 section headers, starting at offset 0x17a90:

Section Headers:

[Nr] Name Type Addr Off Size ES Flg Lk Inf Al

[ 0] NULL 00000000 000000 000000 00 0 0 0

[ 1] .note.gnu.build-i NOTE 400000d4 0000d4 000024 00 A 0 0 4

[ 2] .eh_frame PROGBITS 400000f8 0000f8 000d18 00 A 0 0 4

[ 3] .eh_frame_hdr PROGBITS 40000e10 000e10 000174 00 A 0 0 4

[ 4] .text PROGBITS 40001000 001000 0038dc 00 AX 0 0 16

[ 5] .data PROGBITS 40005000 005000 00037c 00 WA 0 0 32

[ 6] .bss NOBITS 40005380 00537c 00c588 00 WA 0 0 32

[ 7] .comment PROGBITS 00000000 00537c 000025 01 MS 0 0 1

[ 8] .debug_aranges PROGBITS 00000000 0053a1 000248 00 0 0 1

[ 9] .debug_info PROGBITS 00000000 0055e9 00765c 00 0 0 1

[10] .debug_abbrev PROGBITS 00000000 00cc45 001fe5 00 0 0 1

[11] .debug_line PROGBITS 00000000 00ec2a 002308 00 0 0 1

[12] .debug_str PROGBITS 00000000 010f32 00240e 01 MS 0 0 1

[13] .debug_loc PROGBITS 00000000 013340 003637 00 0 0 1

[14] .debug_ranges PROGBITS 00000000 016977 000530 00 0 0 1

[15] .symtab SYMTAB 00000000 016ea8 000630 10 16 39 4

[16] .strtab STRTAB 00000000 0174d8 0004fc 00 0 0 1

[17] .shstrtab STRTAB 00000000 0179d4 0000b9 00 0 0 1

Key to Flags:

W (write), A (alloc), X (execute), M (merge), S (strings), I (info),

L (link order), O (extra OS processing required), G (group), T (TLS),

C (compressed), x (unknown), o (OS specific), E (exclude),

p (processor specific)

The failing memory access was indicated by the CR2 value in the screenshot above, which had a value of 0x40012000. The section which was highest in memory here was .bss, which had a maximum address of 0x40005380 + 0xc588 = 0x40005fd8 if my calculation is right.

That's obviously quite a few pages below 0x40012000. I wonder if this could be part of the heap for the fat server; doesn't sound quite unlikely. In cases like this, it would probably make sense to have a debug flag to ensure that heap allocations are always starting at 0x50000000 or something, in development mode. It would make it easier to draw fast conclusions about things. Right now, I think we use the approach of trying to make as much as possible of the virtual address space available for user allocations, which is useful to not waste any more virtual address space than absolutely needed, but has the drawback just mentioned.

Before doing anything else here, I wanted to make sure that the boot server hadn't actually managed to read the startup script, and was already continuing with its next task. In other words: just making 100% sure that the problem I am seeing is still related to the reading of the config file. I was about to comment out the rest of the code in the boot server again, but then I saw this line:

log_print_formatted(&log_structure, LOG_URGENCY_DEBUG, "Starting %u programs.", number_of_programs);

This message never gets printed to the log console, so then we can be 100% sure that it never gets that far. Alright, let's look at the mailbox_receive calls it does, and the size of buffers it allocated and that kind of stuff.

Finding and following a trail: read_clusters as a potential candidate for the crash

Hmm. I got an idea. How about this trail, looking closer at this section of the code:

log_print_formatted(

&log_structure,

LOG_URGENCY_DEBUG,

"entry->name: %s",

entry->name

);

read_clusters(

fat_info,

&directory_buffer,

(entry->first_cluster_number_high << 16) +

entry->first_cluster_number_low,

0,

UINT32_MAX

);

The entry->name line is actually the last line that gets printed, and reading one or more FAT clusters is definitely an operation that could fail. Let's try putting a breakpoint right there, in read_clusters.

vagrant@debian-9rc1-i386:/vagrant$ gdb -ex 'file servers/file_system/fat/fat'

GNU gdb (Debian 7.12-6) 7.12.0.20161007-git

[...]

warning: No executable has been specified and target does not support

determining executable automatically. Try using the "file" command.

0x00110350 in ?? ()

A program is being debugged already.

Are you sure you want to change the file? (y or n) y

Reading symbols from servers/file_system/fat/fat...done.

(gdb) break read_clusters

Breakpoint 1 at 0x400010a0: file clusters.c, line 76.

(gdb) cont

Continuing.

Breakpoint 1, read_clusters (fat_info=0x4, output=0xc0cac01a, start_cluster=0, skip=0, number_of_clusters=0) at clusters.c:76

76 {

(gdb) cont

Continuing.

Breakpoint 1, read_clusters (fat_info=0xfc000f14, output=0x40009500 <directory_buffer>, start_cluster=2, skip=0, number_of_clusters=4294967295)

at clusters.c:76

76 {

(gdb) cont

Continuing.

The first time the breakpoint got called was a spurious breakpoint, since all processes share the same start address (which is discussed further in chaos#104.) The second time, however, represents the real problem! How interesting! Let's restart it again (since it crashed) and see if we can step a bit into the read_clusters method. Skipping some of the output here as to not get excessively carried away with the copy & paste, but let's conclude that I stepped a bit further into the place where it would actually do the IPC - namely clusters.c

Debugging this turned out to be a mess, so I ended up trying to fix chaos#104 at this point... It was simply so frustrating trying to put a breakpoint and having it break 10 times for unrelated calls, with garbage function parameters.

Strange! Even after having moved the fat server to a location 32 MiB higher, i.e. 0x42000000, I still get these same strange spurious breakpoints. How about if I move it to a much higher address, say 0x50000000 (256 MiB higher than other binaries)? In line with the "if at first you don't succeed, try again" philosophy. A very sophisticated philosophy indeed. ![]()

Moving it there helped, so it must be that I needed to space the processes a bit further away from each other. Works for me, it's not really an issue right now. This led me to this very interesting debug session! (do I need to say it's past midnight again?)

vagrant@debian-9rc1-i386:/vagrant$ gdb -ex 'file servers/file_system/fat/fat'

GNU gdb (Debian 7.12-6) 7.12.0.20161007-git

[...]

(gdb) break read_single_cluster

Breakpoint 1 at 0x500010f0: read_single_cluster. (2 locations)

(gdb) cont

Continuing.

Breakpoint 1, read_clusters (fat_info=0xfc000f14, output=0x50009500 <directory_buffer>, start_cluster=2, skip=0, number_of_clusters=4294967295)

at clusters.c:90

90 read_single_cluster(fat_info, cluster_number, (void *)

(gdb) print *fat_info

$1 = {sectors_per_cluster = 4, bytes_per_sector = 512, first_data_sector = 100, root_directory_sectors = 32, bytes_per_cluster = 2048, block_structure = {

input_mailbox_id = 46, output_mailbox_id = 47}, fat = 0x50005380 <global_fat>, root = 0x5000d900 <global_root>, bits = 16}

Do you see it? Thank good Lord God for gdb! A good debugger is really an invaluable tool at these times.

The problem is there, right in the number_of_clusters parameter. 4294967295, thats exactly 0xFFFFFFFF (forgive me, I double-checked.) In other words, the highest unsigned 32-bit integer value, and this clearly indicates that we have a bug in the FAT server. It should definitely not try to read 4 billion clusters from the initial ramdisk! And the initial ramdisk shouldn't allow it's receivers to be overflowed this easily. So, bugs in multiple areas of the system was encountered.

A false track: Trying to understand why number_of_clusters had a bogus value

Let's first do the right thing and add checking in the initial_ramdisk, so that you cannot read billions of sectors from a 16 MiB large ramdisk... I started looking at the code but realized that it was already in place:

ipc_block_read_type *ipc_block_read = (ipc_block_read_type *) data;

// Do some boundary checking.

if (ipc_block_read->start_block_number + ipc_block_read->number_of_blocks > ipc_block_info.number_of_blocks)

{

// FIXME: Should we do the error handling in some other way?

message_parameter.data = NULL;

message_parameter.length = 0;

log_print(&log_structure, LOG_URGENCY_ERROR, "Tried to read out of range.");

}

else

{

// ...

}

That looks basically sane. The ipc_block_info.number_of_blocks gets initialized from the value of NUMBER_OF_BLOCKS, which gets defined in an auto-generated header file from the ramdisk. It has a value of 32768.

So, back to the debugger again... We saw that number_of_clusters had a huge, garbage-like value. What did it actually put in the ipc_block_read structure then, that made the error appear?

Let's break in read_clusters again, now that we have a working gdb setup with no spurious breakpoints (that is clearly worth celebrating!), and see what we get. I also changed the CFLAGS a bit here, since gdb felt a bit "jumping around" and the code was compiled with -O3 - in other words, with optimization enabled, which can make the debug experience be sub-par. I changed that to -O0 and added the -ggdb3 flag, to "optimize for debugging" rather than for runtime speed, for now.

It was already late at night (little time for chaos this day, unfortunately) so I was getting tired and getting nowhere. I decided to a simple "check if number_of_clusters > current allowed max" check for now, to then try to take it from that angle instead.

That gave me this output instead. Much nicer than a page fault!

So... did I ever find anything more about the root cause for this strange value? Let's backtrack a bit (by looking at the stacktrace) and see the code that called the read_clusters function. The call was coming from a method called fat_directory_read, from a section that looked like this:

read_clusters(

fat_info,

&directory_buffer,

(entry->first_cluster_number_high << 16) +

entry->first_cluster_number_low,

0,

UINT32_MAX

);

Imagine the look of my face when I saw that piece of code. ![]() Okay. So the fact that it got a -1, a.k.a

Okay. So the fact that it got a -1, a.k.a 0xFFFFFFFF or UINT32_MAX was not that strange... In fact, the read_clusters method is actually annotated with a comment like this:

// Read the contents of the given cluster chain, starting from start_cluster + skip, and going number_of_clusters

// or until the end of the chain, depending on which is encountered first. Returns the cluster

// number where we ended, or U32_MAX if we got to the end of the file/directory/whatever.

So, in other words: the fix I did the other night to try and get rid of the page fault, was completely wrong. A false start. (well, not even a start since I'm already hundreds of lines into this blog post...) I reverted that change; luckily, it hadn't been pushed or even committed yet in this case, which made things a bit simpler.

Back on track: looking at the page fault again

Back to that dreadful page fault then... ![]()

I uncommented some nice debug code that we had left in the fat server since the time when it was originally written, which gave me this interesting output on startup:

(I switched from doing the screenshots with VirtualBox to qemu, if you wonder why it looks a bit different from the previous ones all of a sudden.)

This is now very interesting! Why is it reading cluster 0 over and over again?

Being reminded about the danger of improperly written C code

Let's look at the read_clusters code for a while:

uint32_t read_clusters(fat_info_type *fat_info, void *output, uint32_t start_cluster, uint32_t skip, uint32_t number_of_clusters)

{

uint32_t cluster_number = start_cluster;

uint32_t clusters_read = 0;

do

{

if (skip > 0)

{

skip--;

}

else

{

#ifdef DEBUG

log_print_formatted (

&log_structure,

LOG_URGENCY_DEBUG,

"Reading cluster number %u",

cluster_number

);

#endif

void *data_buffer = (void *) (

(uint32_t) output + (

clusters_read *

fat_info->bytes_per_sector *

fat_info->sectors_per_cluster

)

);

read_single_cluster(fat_info, cluster_number, data_buffer);

clusters_read++;

}

cluster_number = get_next_cluster(cluster_number, fat_info->fat, fat_info->bits);

} while (cluster_number != MAX_uint32_t && clusters_read < number_of_clusters);

return cluster_number;

}

Now, there are some obvious issues here: the fact that the code reads cluster data into a data buffer without any length restrictions whatsoever is clearly very dangerous, and is the reason for why we are running into these issues right now. I mean, this is a classic buffer overrun. We try to write data into a memory location beyond what has been allocated for us, and we can just consider us lucky enough to have paging enabled and the next page in memory not being mapped (which is why we get the page fault.) It would have been much worse if other memory had randomly been overwritten, which could very well have been the case. Scenarios like this is precisely why C is a dangerous language, and why people have invented things like Rust to fix these shortcomings. (Other languages/environments would be worth mentioning as well, like Java and .NET which both have fixed these issues, even though they do not strictly compete about the same "market share".)

C is a fine language if you use it right, but the problem which the function above shows is that it's far too easy for imperfect humans to make simple mistakes that have severe consequences. It can be laziness, it can be "just trying to get it work first, then make it safe", or other good or bad reasons. It doesn't really matter; the language allows us to make these mistakes and there is very little safety built in to the system. For better and worse, but mostly for worse.

So, the design flaw stated above in combination with the get_next_cluster function returning the same cluster over and over again means that we run into this bug.

For now, I will ignore the design flaw, but I will file a GitHub issue about it for later: chaos#105. You know, for a rainy day or something... ![]()

The FAT data seems to be messed up - but why?

I think I will want to step into get_next_cluster now. It seems like the FAT table is pretty messed up, as if we have not been able to read it properly from the initial_ramdisk server or something like that.

Breakpoint 1, get_next_cluster (cluster_number=2, fat=0x480073a0 <global_fat>, bits=16) at clusters.c:13

13 {

(gdb) step

14 switch (bits)

(gdb)

36 fat16_type *fat16 = fat;

(gdb)

37 uint32_t new_cluster_number = fat16[cluster_number];

(gdb)

39 if (new_cluster_number >= FAT16_END_OF_CLUSTER_CHAIN)

(gdb) print new_cluster_number

$1 = 0

Hmm, okay. So cluster 2 refers to cluster zero as its "next cluster". That's a bit weird. What does the rest of fat16 look like?

0x480073a0 <global_fat>: 0x00000000 0x00000000 0x00000000 0x00000000

0x480073b0 <global_fat+16>: 0x00000000 0x00000000 0x00000000 0x00000000

0x480073c0 <global_fat+32>: 0x00000000 0x00000000 0x00000000 0x00000000

0x480073d0 <global_fat+48>: 0x00000000 0x00000000 0x00000000 0x00000000

0x480073e0 <global_fat+64>: 0x00000000 0x00000000 0x00000000 0x00000000

0x480073f0 <global_fat+80>: 0x00000000 0x00000000 0x00000000 0x00000000

0x48007400 <global_fat+96>: 0x00000000 0x00000000 0x00000000 0x00000000

0x48007410 <global_fat+112>: 0x00000000 0x00000000 0x00000000 0x00000000

0x48007420 <global_fat+128>: 0x00000000 0x00000000 0x00000000 0x00000000

0x48007430 <global_fat+144>: 0x00000000 0x00000000 0x00000000 0x00000000

0x48007440 <global_fat+160>: 0x00000000 0x00000000 0x00000000 0x00000000

0x48007450 <global_fat+176>: 0x00000000 0x00000000 0x00000000 0x00000000

0x48007460 <global_fat+192>: 0x00000000 0x00000000 0x00000000 0x00000000

0x48007470 <global_fat+208>: 0x00000000 0x00000000 0x00000000 0x00000000

0x48007480 <global_fat+224>: 0x00000000 0x00000000 0x00000000 0x00000000

0x48007490 <global_fat+240>: 0x00000000 0x00000000 0x00000000 0x00000000

0x480074a0 <global_fat+256>: 0x00000000 0x00000000 0x00000000 0x00000000

0x480074b0 <global_fat+272>: 0x00000000 0x00000000 0x00000000 0x00000000

0x480074c0 <global_fat+288>: 0x00000000 0x00000000 0x00000000 0x00000000

0x480074d0 <global_fat+304>: 0x00000000 0x00000000 0x00000000 0x00000000

0x480074e0 <global_fat+320>: 0x00000000 0x00000000 0x00000000 0x00000000

0x480074f0 <global_fat+336>: 0x00000000 0x00000000 0x00000000 0x00000000

0x48007500 <global_fat+352>: 0x00000000 0x00000000 0x00000000 0x00000000

0x48007510 <global_fat+368>: 0x00000000 0x00000000 0x00000000 0x00000000

0x48007520 <global_fat+384>: 0x00000000 0x00000000 0x00000000 0x00000000

0x48007530 <global_fat+400>: 0x00000000 0x00000000 0x00000000 0x00000000

I'm not an expert of the FAT file system by any means, but that does clearly not look very correct. ![]() A bunch of zeroes like this more looks like a piece of uninitialized data than a FAT table.

A bunch of zeroes like this more looks like a piece of uninitialized data than a FAT table.

I looked in the detect_fat.c file, and concluded that it had very little (in fact, none at all!) handling of cases when the ipc_send or ipc_receive failed, so I added that. Maybe it's trying to read the global_fat from the initial_ramdisk, but for whatever reason failing and because it doesn't check the return values properly it could be that it just goes on and continues to run, with garbage data... (again, it's too easy to "cheat" with things like this in C which this real-world scenario shows plainly)

With that in place, I still got the page fault on startup. Interesting! I decided to change the reading of the global_fat bit like this:

memory_set_uint32_t((uint32_t *) &global_fat, 0xDEADBEEF, 16384 / sizeof(uint32_t));

message_parameter.length = 16384;

message_parameter.data = &global_fat;

if (ipc_receive(fat_info->block_structure.input_mailbox_id, &message_parameter, NULL) != IPC_RETURN_SUCCESS)

It would previously initialize the global_fat with zeroes, but I thought it could be better to put a more uncommon value there. And 0xDEADBEEF is a nice hex value, used elsewhere as well, but not yet in chaos. ![]()

Interesting, I still got the same behavior, and it seems to be reading cluster 0 over and over again... Could it be the sending of the cluster from the initial ramdisk that is broken somehow? I have changed it a bit; previously the initial ramdisk was a 1.44 MiB floppy image or something, but it was changed to a 16 MiB image a while ago to be able to fit in more servers (and hopefully Quake some day. ![]() ) So, the preconditions are somewhat different to what they used to be, but still... Very strange indeed.

) So, the preconditions are somewhat different to what they used to be, but still... Very strange indeed.

Looking at data being sent from the initial_ramdisk server

I put a breakpoint at the ipc_send line in initial_ramdisk.c, to be able to look at the data being sent to the FAT server. It looked completely sane:

(gdb) x/500b message_parameter->data

0x50001270 <ramdisk>: 0xeb 0x3c 0x90 0x6d 0x6b 0x66 0x73 0x2e

0x50001278 <ramdisk+8>: 0x66 0x61 0x74 0x00 0x02 0x04 0x04 0x00

0x50001280 <ramdisk+16>: 0x02 0x00 0x02 0x00 0x80 0xf8 0x20 0x00

0x50001288 <ramdisk+24>: 0x20 0x00 0x40 0x00 0x00 0x00 0x00 0x00

0x50001290 <ramdisk+32>: 0x00 0x00 0x00 0x00 0x80 0x00 0x29 0x20

0x50001298 <ramdisk+40>: 0xf5 0x0c 0xf5 0x4e 0x4f 0x20 0x4e 0x41

0x500012a0 <ramdisk+48>: 0x4d 0x45 0x20 0x20 0x20 0x20 0x46 0x41

This is not a full sector of 0x00 which I was fearing. I continued the session and let it break again on the next ipc_send call:

(gdb) x/500b message_parameter->data

0x50001470 <ramdisk+512>: 0x00 0x00 0x00 0x00 0x00 0x00 0x00 0x00

0x50001478 <ramdisk+520>: 0x00 0x00 0x00 0x00 0x00 0x00 0x00 0x00

0x50001480 <ramdisk+528>: 0x00 0x00 0x00 0x00 0x00 0x00 0x00 0x00

0x50001488 <ramdisk+536>: 0x00 0x00 0x00 0x00 0x00 0x00 0x00 0x00

0x50001490 <ramdisk+544>: 0x00 0x00 0x00 0x00 0x00 0x00 0x00 0x00

Now this if finally interesting! A sector of zeroes; if I let it continue now it will probably give me these "reading cluster number 0" lines.

Of course, this is again a symptom of an underlying problem; the FAT server performs far too little error checking and validation of data it reads, which is why it just keeps going even when it has read this completely broken FAT sector from the initial ramdisk. (Man, software engineering is hard! You can get it working in the "sunny day" cases fairly easily, but making it really work and have proper error handling etc... that takes a lot of effort!)

I looked a bit more to see which blocks it was trying to read. The first call looked like this:

(gdb) p *ipc_block_read

$1 = {start_block_number = 0, number_of_blocks = 1}

The subsequent like this:

(gdb) p *ipc_block_read

$2 = {start_block_number = 1, number_of_blocks = 32}

(gdb) p *ipc_block_read

$3 = {start_block_number = 68, number_of_blocks = 32}

(gdb) p *ipc_block_read

$4 = {start_block_number = 100, number_of_blocks = 4}

(gdb) p *ipc_block_read

$5 = {start_block_number = 92, number_of_blocks = 4}

(gdb) p *ipc_block_read

$6 = {start_block_number = 92, number_of_blocks = 4}

(gdb) p *ipc_block_read

$7 = {start_block_number = 92, number_of_blocks = 4}

...

So... The reading of 32 blocks starting from block number 1 gives a very weird result. The block size, as given from ipc_block_info, should be 512 bytes in this case:

(gdb) p ipc_block_info

$10 = {block_size = 512, number_of_blocks = 32768, writable = 1, readable = 1, label = "Initial ramdisk 0.0.1", '\000' <repeats 42 times>}

Investigating the data in the actual ramdisk image

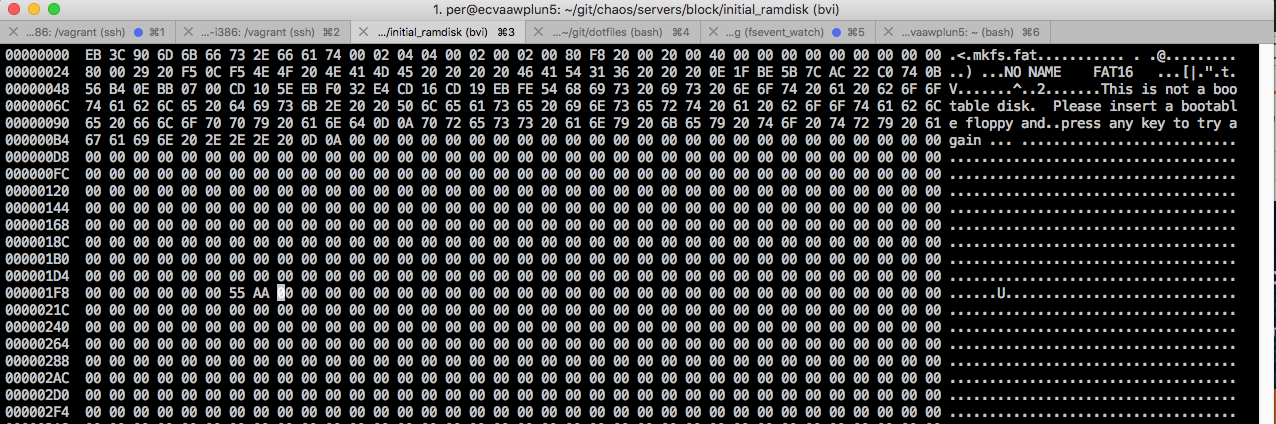

Let's look at the ramdisk image, shall we (using the tool bvi):

The cursor position is exactly at 0x200, i.e. the second sector = block number 1 (zero-indexed). And it does indeed look like it's pretty much zeroed out...

So, where is this call coming from? I think I'll need to put a breakpoint in the fat server, where it's reading the file, to track down the ipc_send calls from that end. Since the initial_ramdisk is running in its own address space as a separate process, I can unfortunately not just look at the call stack... (I can see very clear reasons why multi-process based operating systems aren't more popular than they are, to be honest. They are a pain to debug sometimes; monolithic systems like Linux have a much simpler architecture.)

I started debugging this with a breakpoint in fat_file_get_info, and then something wonderful happened. I stepped through the code for a while, and found myself with the debugger at one half of the screen, and navigating through the code ("go to definition") in the editor at the other half of the screen. The IDE experience in that sense was nice! I could look at the program while it was running in the terminal, and jump around in the code easily in the other window - without the computer being slow, sluggish etc. which it often feels otherwise these days... Anyway, it felt nice!

I kept digging. I learned that a FAT directory entry is 32 bytes long, thank you for that GDB!

(gdb) print sizeof(fat_entry_type)

$7 = 32

By looking at the hex dump of the fat_entry data, it's quite clear that this FAT root directory contains one single entry (which makes complete sense, given that it is supposed to contain a single config folder. Let's print it out as strings and we should see it ourselves!

get_entry_by_name (fat_entry=0x4800b5a0 <directory_buffer>, name=0x7d7001 "CONFIG") at get_entry_by_name.c:15

15 {

(gdb) x/8xs fat_entry

0x4800b5a0 <directory_buffer>: "CONFIG \020\b"

0x4800b5ae <directory_buffer+14>: "\270\212MKMK"

0x4800b5b5 <directory_buffer+21>: ""

0x4800b5b6 <directory_buffer+22>: "\270\212MK\002"

0x4800b5bc <directory_buffer+28>: ""

0x4800b5bd <directory_buffer+29>: ""

0x4800b5be <directory_buffer+30>: ""

0x4800b5bf <directory_buffer+31>: ""

The entry right after is completely empty as can be seen here:

(gdb) x/32xb &fat_entry[1]

0x4800b5c0 <directory_buffer+32>: 0x00 0x00 0x00 0x00 0x00 0x00 0x00 0x00

0x4800b5c8 <directory_buffer+40>: 0x00 0x00 0x00 0x00 0x00 0x00 0x00 0x00

0x4800b5d0 <directory_buffer+48>: 0x00 0x00 0x00 0x00 0x00 0x00 0x00 0x00

0x4800b5d8 <directory_buffer+56>: 0x00 0x00 0x00 0x00 0x00 0x00 0x00 0x00

I continued stepping through the code, things looked basically sane all the way until it should read the clusters, using this code:

read_clusters(

fat_info,

&directory_buffer,

(entry->first_cluster_number_high << 16) +

entry->first_cluster_number_low,

0,

UINT32_MAX

);

The entry also looked fine; in this case, the directory data would be located at the second cluster, from what I could tell.

(gdb) print *entry

$16 = {name = "CONFIG\000 ", extension = "\000 ", read_only = 0 '\000', hidden = 0 '\000', system = 0 '\000', volume_id = 0 '\000', directory = 1 '\001',

archive = 0 '\000', reserved = 0 '\000', nt_reserved = 8 '\b', creation_hundreds = 0 '\000', creation_time = {hours = 24, minutes = 21, seconds = 17},

creation_date = {year = 77, month = 6, day = 9}, access_date = {year = 77, month = 6, day = 9}, first_cluster_number_high = 0, update_time = {

hours = 24, minutes = 21, seconds = 17}, update_date = {year = 77, month = 6, day = 9}, first_cluster_number_low = 2, file_size = 0,

next = 0x4800b5c0 <directory_buffer+32> ""}

A closer look at the FAT directory entries

I continued a while more, stepping in the fat server and eventually switching over to place a breakpoint in the initial_ramdisk server for the reading of block 100. This also looked pretty fine actually. I placed a breakpoint right at the end of the reading inside initial_ramdisk.c, line 95 and printed the contents of the data it was returning to the caller:

(gdb) x/30xs message_parameter->data

0x5000da70 <ramdisk+51200>: ". \020"

0x5000da7d <ramdisk+51213>: ""

0x5000da7e <ramdisk+51214>: "\270\212MKMK"

0x5000da85 <ramdisk+51221>: ""

0x5000da86 <ramdisk+51222>: "\270\212MK\002"

0x5000da8c <ramdisk+51228>: ""

0x5000da8d <ramdisk+51229>: ""

0x5000da8e <ramdisk+51230>: ""

0x5000da8f <ramdisk+51231>: ""

0x5000da90 <ramdisk+51232>: ".. \020"

0x5000da9d <ramdisk+51245>: ""

0x5000da9e <ramdisk+51246>: "\270\212MKMK"

0x5000daa5 <ramdisk+51253>: ""

0x5000daa6 <ramdisk+51254>: "\270\212MK"

0x5000daab <ramdisk+51259>: ""

0x5000daac <ramdisk+51260>: ""

0x5000daad <ramdisk+51261>: ""

0x5000daae <ramdisk+51262>: ""

0x5000daaf <ramdisk+51263>: ""

0x5000dab0 <ramdisk+51264>: "SERVERS \020\b"

0x5000dabe <ramdisk+51278>: "\270\212MKMK"

0x5000dac5 <ramdisk+51285>: ""

0x5000dac6 <ramdisk+51286>: "\270\212MK\003"

0x5000dacc <ramdisk+51292>: ""

0x5000dacd <ramdisk+51293>: ""

0x5000dace <ramdisk+51294>: ""

0x5000dacf <ramdisk+51295>: ""

0x5000dad0 <ramdisk+51296>: ""

0x5000dad1 <ramdisk+51297>: ""

0x5000dad2 <ramdisk+51298>: ""

This might not be obvious for everyone, but I see three file entries here:

-

.- i.e. the current working directory. -

..- the parent directory. -

SERVERS- the folder we're looking for.

It felt like I was getting closer here. I switched back the file being debugged to servers/file_system/fat/fat, and meticulously stepped one instruction at a time into the kernel (since I could only have the symbols for one single ELF image loaded at a time, I couldn't just step out of the function - GDB knew too little about the code being debugged in this case) and hopefully, eventually back to the 0x4800xxxx address space...

(gdb) stepi

0x50001178 in ?? ()

(gdb) stepi

0x5000117c in ?? ()

(gdb)

0x5000117d in ?? ()

(gdb)

0x5000117e in ?? ()

(gdb)

0x51001610 in ?? ()

(gdb)

0x51001614 in ?? ()

(gdb)

0x51001618 in ?? ()

(gdb)

0x51001619 in ?? ()

(gdb)

0x5100161a in ?? ()

(gdb)

0x0010a430 in ?? ()

(gdb)

0x0010a431 in ?? ()

(many lines omitted) This was extremely tedious, but I felt I was so close now that I didn't want to let go of it! Yeah, I might be able to just restart and get back to the current state, but... I didn't want to do that.

It never seemed to get done. Weird! Maybe the fact that the debugger was attached was preventing the interrupts from being raised or something. I gave up for now and just let it continue, probably crashing.

Crashing it did. Let's reboot the VM and see if we can get back into this state... We see that it managed to read the root folder, then the config folder also seemingly.

I quite easily managed to get it to the state where it was crashed; I was now back into the middle of the read_clusters function again. I saw that it was calling a method named get_next_cluster; it is mentioned earlier in this blog post. My suspicion right now is that it only works properly with FAT12 volumes; since that's all we've been testing it with, and we don't have any unit or integration tests that validates it works correctly on both FAT12 and FAT16... we can't really expect it to work that well, can we? (This is a reminder to myself and everyone else: don't skip writing tests. You are only hurting yourself and your peers in the long run. You believe you are saving time right now, but you're not.)

Finding a bug in the FAT16 implementation: incorrect assumptions

I continued the digging and came back to the global_fat, also mentioned quite a lot earlier here. I started looking into how that structure was being populated. Something like this:

message_parameter.data = &ipc_block_read;

message_parameter.length = sizeof(ipc_block_read_type);

ipc_block_read.start_block_number = 1;

ipc_block_read.number_of_blocks = bios_parameter_block->fat_size_16;

if (ipc_send(fat_info->block_structure.output_mailbox_id, &message_parameter) != IPC_RETURN_SUCCESS)

{

// ...

Okay, so exactly here is the problem. The start block number is 1. We already saw, in the hexdump a while ago, that the second sector in this volume contains all zeroes. So maybe the location of the first FAT cannot be hardwired like that always? I looked at a document describing the FAT format:

1.1 Layout

First the boot sector (at relative address 0), and possibly other stuff. Together these are the Reserved Sectors. Usually the boot sector is the only reserved sector.

Then the FATs (following the reserved sectors; the number of reserved sectors is given in the boot sector, bytes 14-15; the length of a sector is found in the boot sector, bytes 11-12).

That's interesting. Let's look at bytes 14-15 in the boot sector again (highlighted with stars below):

** **

00000000 EB 3C 90 6D 6B 66 73 2E 66 61 74 00 02 04 04 00 02 00 02 00 80 F8 20 00 20 00 40 00 00 00 00 00 00 00 00 00 .<.mkfs.fat........... . .@.........

00000024 80 00 29 20 F5 0C F5 4E 4F 20 4E 41 4D 45 20 20 20 20 46 41 54 31 36 20 20 20 0E 1F BE 5B 7C AC 22 C0 74 0B ..) ...NO NAME FAT16 ...[|.".t.

00000048 56 B4 0E BB 07 00 CD 10 5E EB F0 32 E4 CD 16 CD 19 EB FE 54 68 69 73 20 69 73 20 6E 6F 74 20 61 20 62 6F 6F V.......^..2.......This is not a boo

0000006C 74 61 62 6C 65 20 64 69 73 6B 2E 20 20 50 6C 65 61 73 65 20 69 6E 73 65 72 74 20 61 20 62 6F 6F 74 61 62 6C table disk. Please insert a bootabl

00000090 65 20 66 6C 6F 70 70 79 20 61 6E 64 0D 0A 70 72 65 73 73 20 61 6E 79 20 6B 65 79 20 74 6F 20 74 72 79 20 61 e floppy and..press any key to try a

000000B4 67 61 69 6E 20 2E 2E 2E 20 0D 0A 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 gain ... ...........................

Heureka! We have it! The problem is that the code made stupid, incorrect assumptions that might have worked on 1.44 MiB floppy images years ago, being formatted with the mtools mformat program or similar. Anyway, it is a bad and stupid assumption and we should fix that now, to sort out the bug.

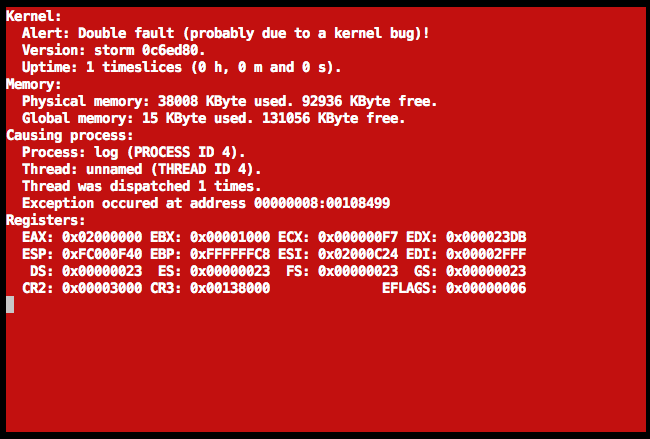

Illegal page fault in the boot server

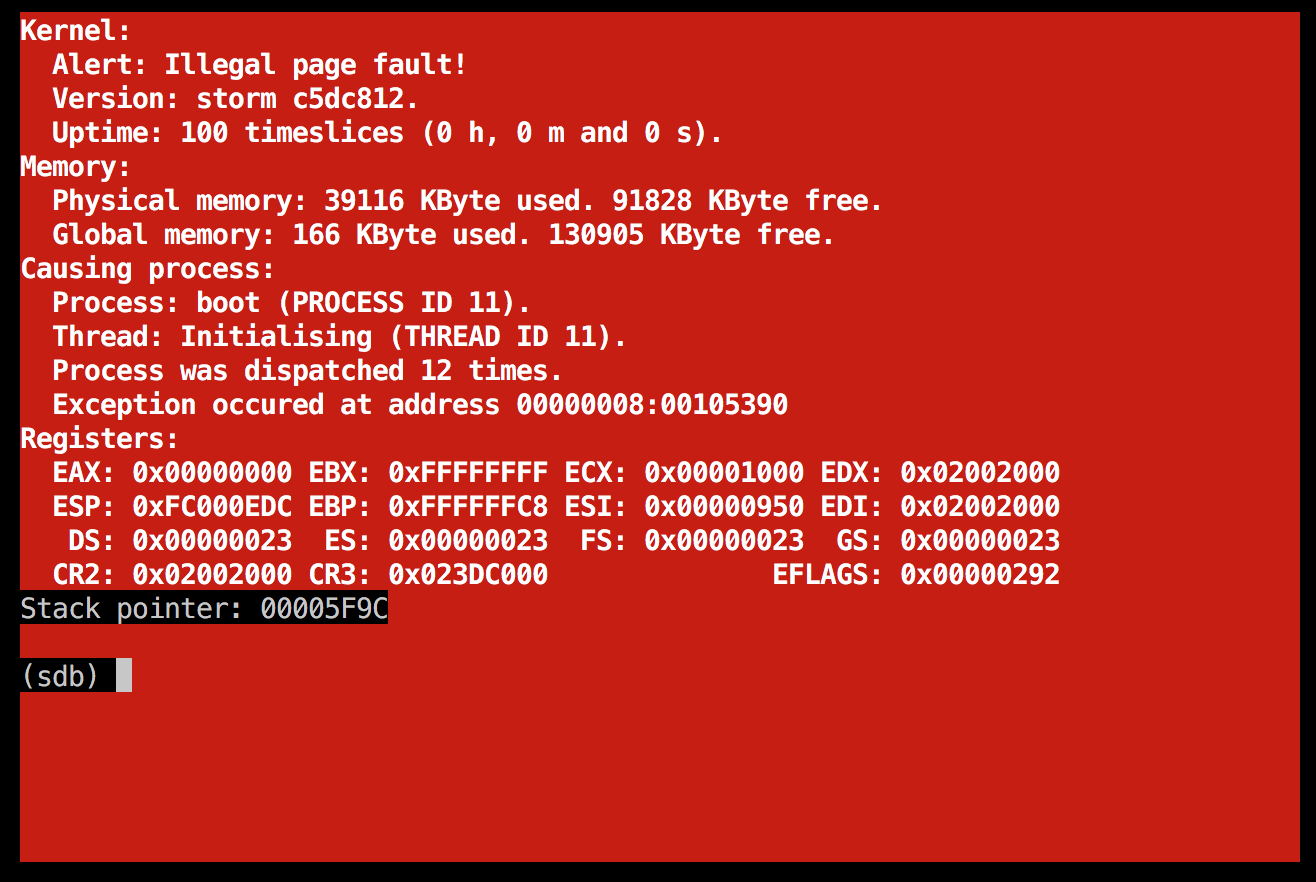

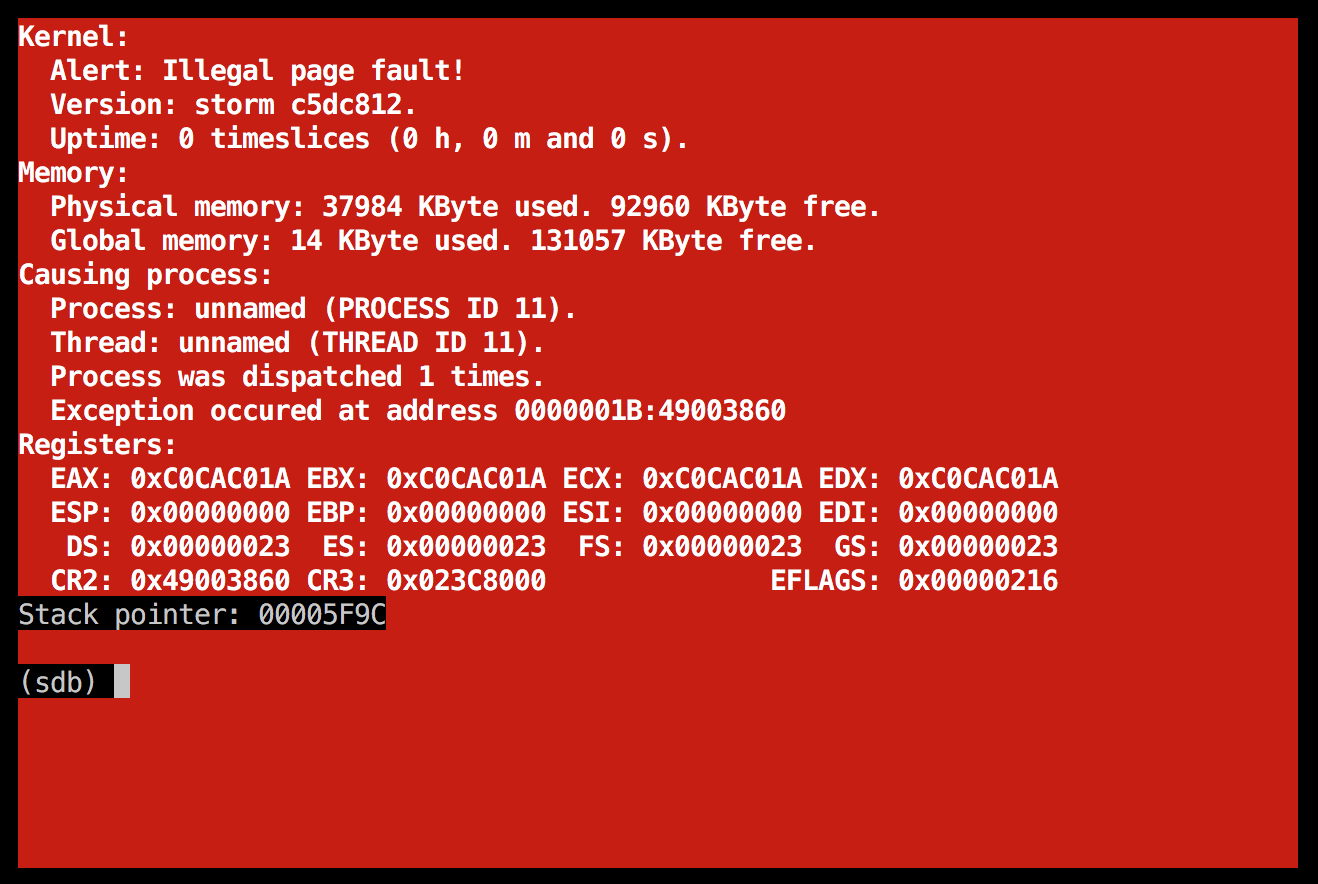

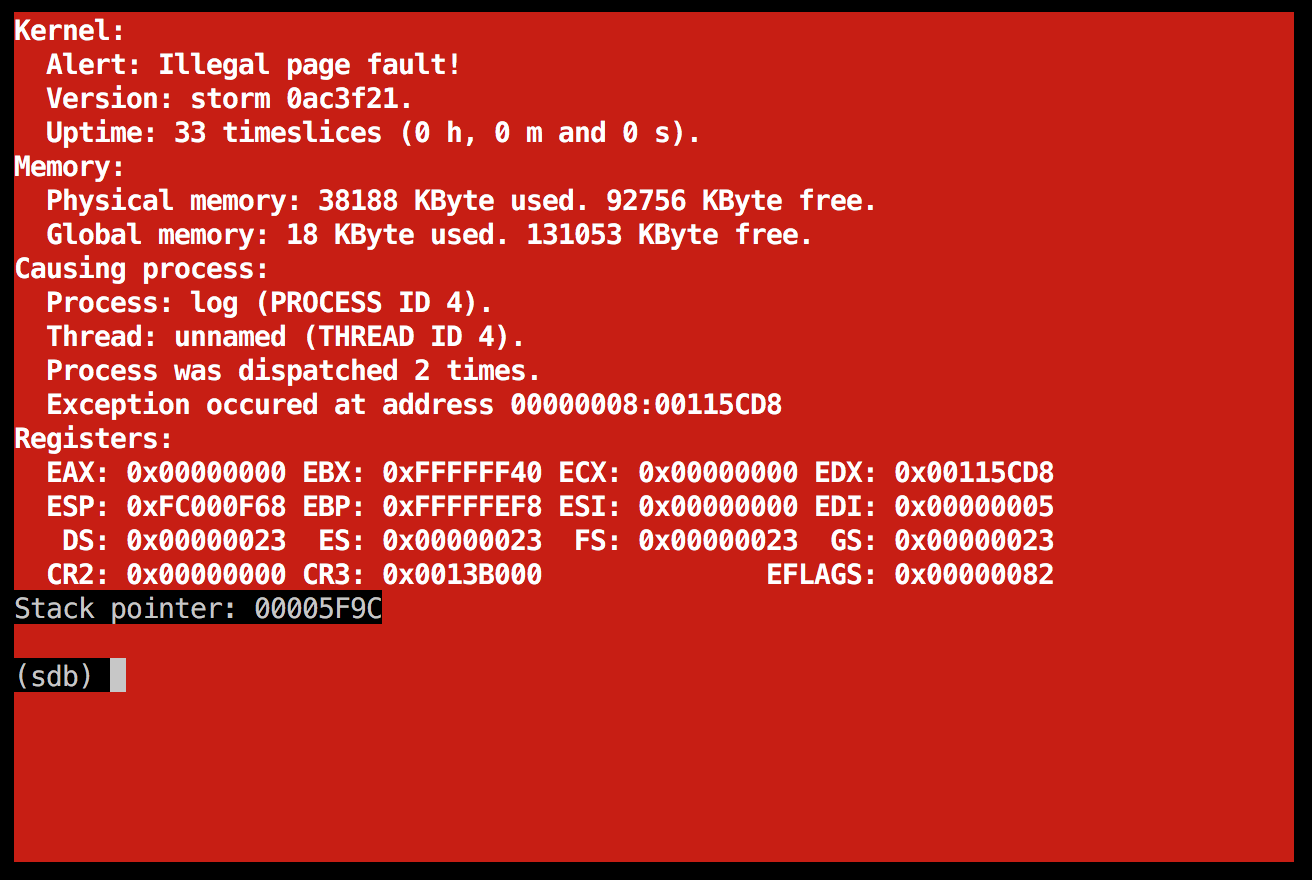

After fixing that, I ran into the next problem.

Isn't life great to you sometimes? ![]() I looked at the stack trace - now it seems to be crashing later on:

I looked at the stack trace - now it seems to be crashing later on:

400013cf <parse_program_list>:

static unsigned int parse_program_list(unsigned int file_size)

{

400013cf: 83 ec 10 sub $0x10,%esp

unsigned int number_of_programs = 0;

400013d2: c7 44 24 0c 00 00 00 movl $0x0,0xc(%esp)

400013d9: 00

programs[number_of_programs++] = &program_list_buffer[0];

400013da: 8b 44 24 0c mov 0xc(%esp),%eax

400013de: 8d 50 01 lea 0x1(%eax),%edx

400013e1: 89 54 24 0c mov %edx,0xc(%esp)

400013e5: 8b 15 40 44 00 40 mov 0x40004440,%edx

400013eb: 89 14 85 60 44 00 40 mov %edx,0x40004460(,%eax,4)

// Convert the LF-terminated file into a buffer o NUL-terminated strings.

for (unsigned int i = 1; i < file_size; i++)

400013f2: c7 44 24 08 01 00 00 movl $0x1,0x8(%esp)

400013f9: 00

400013fa: eb 52 jmp 4000144e <parse_program_list+0x7f>

{

if (program_list_buffer[i] == '\n')

400013fc: 8b 15 40 44 00 40 mov 0x40004440,%edx

40001402: 8b 44 24 08 mov 0x8(%esp),%eax

40001406: 01 d0 add %edx,%eax

40001408: 0f b6 00 movzbl (%eax),%eax

4000140b: 3c 0a cmp $0xa,%al

4000140d: 75 3a jne 40001449 <parse_program_list+0x7a>

Let's make a new breakpoint at parse_program_list and see what we get; perhaps we even have some file content? That would be completely awesome!

(gdb) break parse_program_list

Breakpoint 1 at 0x400013cf: file boot.c, line 168.

(gdb) cont

Continuing.

Breakpoint 1, parse_program_list (file_size=1073762304) at boot.c:168

168 {

(gdb) info locals

number_of_programs = 1073746895

Hmm. ![]() I don't think the file is really one gigabyte large. Something has corrupted things earlier on. Maybe it's again a case of lack of proper error handling. Let's go back to the

I don't think the file is really one gigabyte large. Something has corrupted things earlier on. Maybe it's again a case of lack of proper error handling. Let's go back to the read_program_list method and debug that one a bit more.

if (file_get_info(&vfs_structure, &directory_entry) != FILE_RETURN_SUCCESS)

{

log_print(&log_structure, LOG_URGENCY_ERROR, STARTUP_FILE " not found.");

return FALSE;

}

The directory_entry looks sane after file_get_info. Both the path name and the size field has the expected values, which is nice!

(gdb) p directory_entry

$3 = {path_name = "\000CONFIG\000SERVERS\000BOOT\000STARTUP\000\000STARTUP", '\000' <repeats 771 times>..., success = 1, type = 1, time = 4294967296,

size = 16}

Hmm, this looked weird:

(gdb) p program_list_buffer

$4 = 0x676f7270 <error: Cannot access memory at address 0x676f7270>

(gdb) p *program_list_buffer

Cannot access memory at address 0x676f7270

Either it's a problem with the debugging session or I'm on to something here for real. I noted that we had a dangerous memory allocation where the return value was never checked:

memory_allocate((void **) &program_list_buffer, directory_entry.size);

However, if this allocation fails, and the pointer is never initialized, wouldn't it crash also in the VM? Anyway, I fixed it now so that we verify that the allocation actually was successful before continuing.

It would still unfortunately crash, but now I saw something interesting:

CR2: 0x676F7271

Do you see it? This value is incredibly close to 0x676f7270 listed above.

The EIP address seemed to be in start_programs now during the crash. I decided to comment out that call completely and reboot. It would still crash, unfortunately. I moved the boot server to a unique virtual address to be able to get a better gdb experience and avoid these spurious breakpoints from other processes that are so annoying.

Finding a clear bug: overwriting a pointer variable when reading from the file

Ahh.... THIS was an annoying one:

if (file_read(&vfs_structure, handle, directory_entry.size, &program_list_buffer) != FILE_RETURN_SUCCESS)

{

log_print(&log_structure, LOG_URGENCY_ERROR, "Failed reading from " STARTUP_FILE);

return FALSE;

}

This is wrong. The program_list_buffer is already a pointer, so &program_list_buffer will read the file into the variable that is the pointer instead of the memory being pointed to. A seemingly small but very significant difference... Strange that it didn't crash completely, but we must have been "lucky" enough to not overflow the physical pages actually allocated and mapped for this process.

Things like this make you realize why people think C is such a bad language. Yes, it is indeed. It's beautiful in certain areas, but horrible in others. The existence of void * is one such thing; it's a horrible pointer type that can be cast to/from other pointer types without warnings or errors. But, in this case you could actually argue that file_read shouldn't take a void * as its argument but rather uint8_t *. That way, we would have get a compilation warning, since char ** can be silently converted to void * but not to uint8_t *. I think we'll change it now anyway.

(I wondered whether this was a regression introduced in my recent refactoring of the boot server code, so I looked at the original code. The code seemed completely wrong, so... I'm not so convinced yet.)

After the change, this is what you get now when compiling:

Compiling boot...

boot.c boot.c: In function 'read_program_list':

boot.c:160:65: error: passing argument 4 of 'file_read' from incompatible pointer type [-Werror=incompatible-pointer-types]

if (file_read(&vfs_structure, handle, directory_entry.size, &program_list_buffer) != FILE_RETURN_SUCCESS)

^

In file included from /vagrant/servers/../libraries/file/file.h:12:0,

from config.h:15,

from boot.c:7:

/vagrant/servers/../libraries/file/functions.h:15:20: note: expected 'uint8_t * {aka unsigned char *}' but argument is of type 'char **'

extern return_type file_read(ipc_structure_type *vfs_structure, file_handle_type file_handle, unsigned int length, uint8_t *buffer);

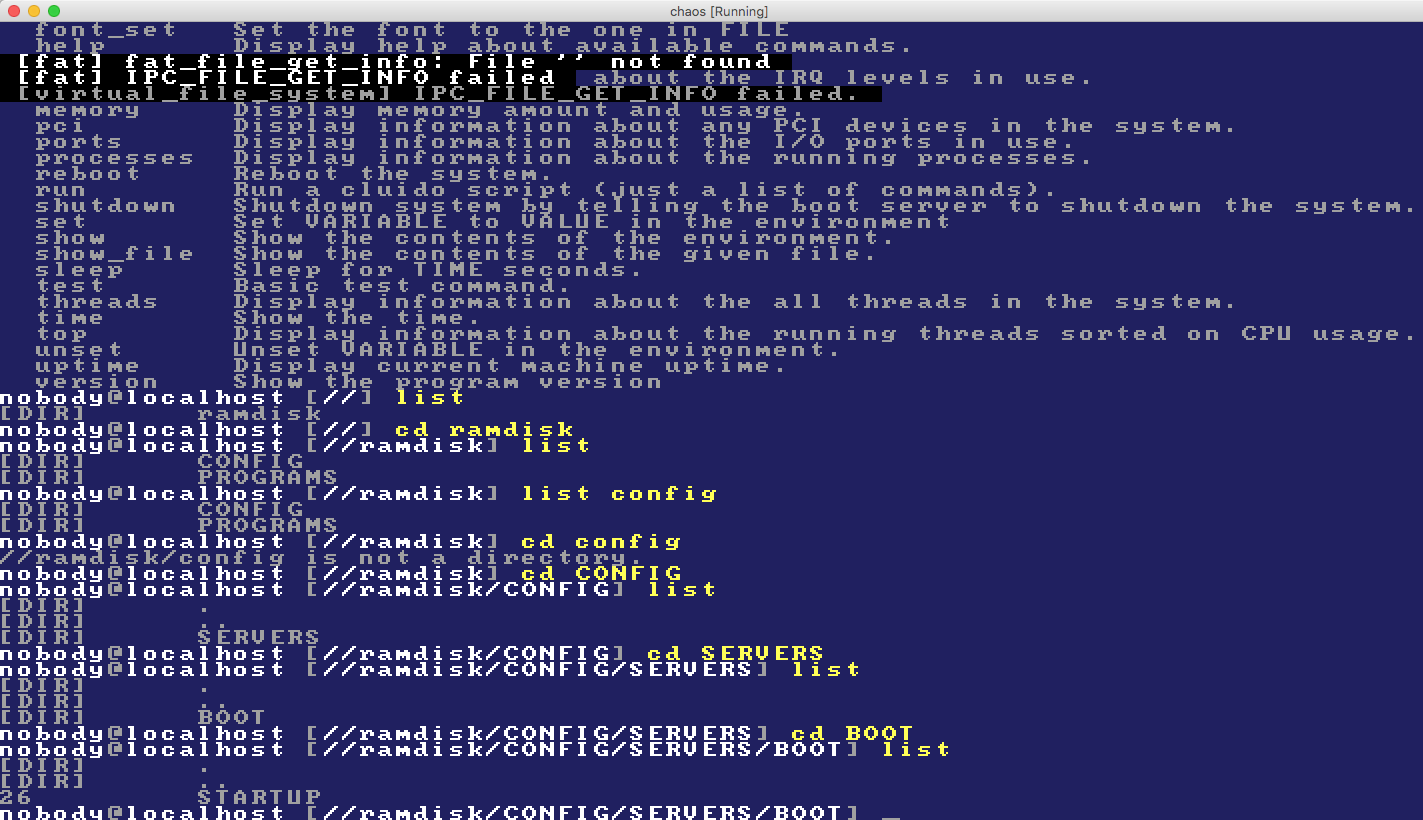

Much better like that! And you know what: after fixing that final bug, this is what we have now:

I think we're really getting there now. Let's disable the excessive logging, and also take back the "start programs" code that is uncommented now and see what we'll get.

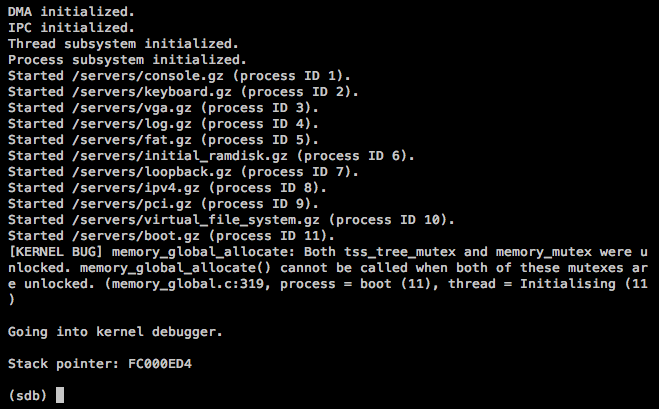

A new error message: programs/cluido could not be accessed

This is what the startup screen looked like:

Much better than before! This might even be a correct error, since the cluido binary is never copied into the ramdisk. Let's add that code (in the programs/Rakefile) and see what we will get!

Strangely enough, it did not make any difference; the files are now there in the ramdisk but I still get the same output on bootup:

vagrant@debian-9rc1-i386:/vagrant$ mdir -s u:

Volume in drive U has no label

Volume Serial Number is 00D4-080D

Directory for U:/

config <DIR> 2017-10-29 5:32

programs <DIR> 2017-10-29 5:32

2 files 0 bytes

Directory for U:/config

. <DIR> 2017-10-29 5:32

.. <DIR> 2017-10-29 5:32

servers <DIR> 2017-10-29 5:32

3 files 0 bytes

Directory for U:/config/servers

. <DIR> 2017-10-29 5:32

.. <DIR> 2017-10-29 5:32

boot <DIR> 2017-10-29 5:32

3 files 0 bytes

Directory for U:/config/servers/boot

. <DIR> 2017-10-29 5:32

.. <DIR> 2017-10-29 5:32

startup 16 2017-10-29 5:32

3 files 16 bytes

Directory for U:/programs

. <DIR> 2017-10-29 5:32

.. <DIR> 2017-10-29 5:32

cluido 211260 2017-10-29 5:32

3 files 211 260 bytes

Total files listed:

14 files 211 276 bytes

16 502 784 bytes free

Unintentionally case sensitive FAT implementation?

I wonder if the problem is with the casing, and the previous theory about the FAT code being case sensitive right now being correct? Let's verify that by changing the boot server back to read the startup script with lowercase letters and see what happens on bootup.

Indeed, that seems to be it. I filed a bug about it - chaos#107; it seems to be mtools that have changed their semantics since we wrote our code almost 20 years ago... ![]()

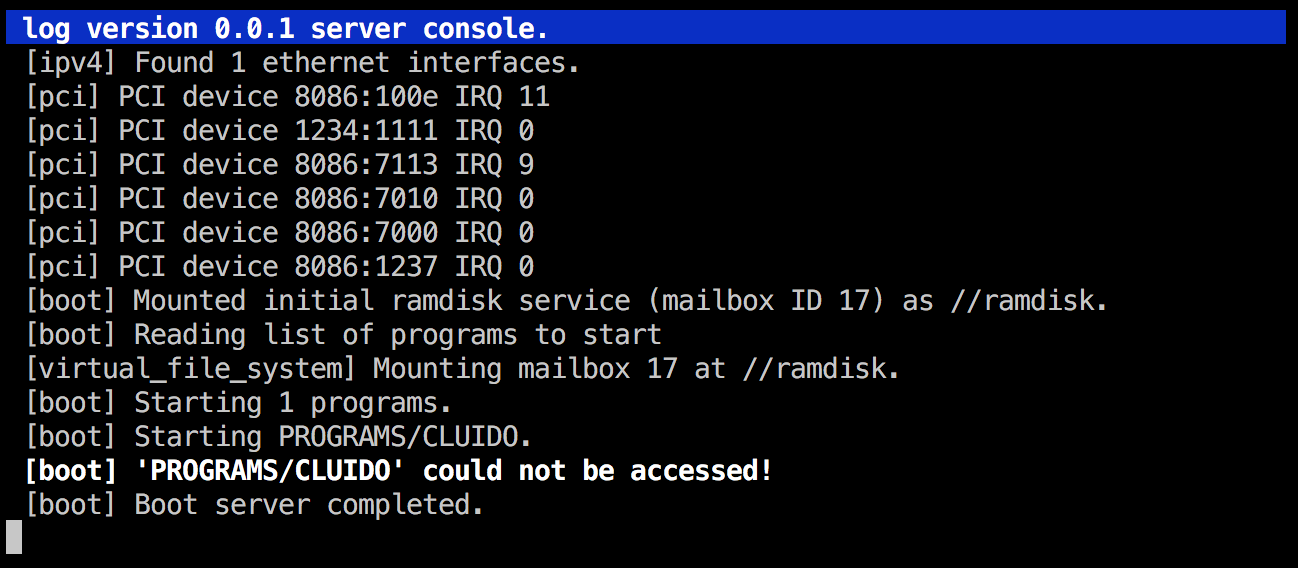

After switching the boot server back to uppercase, and also fixing the cluido path in the startup script, I got this:

Hmmm.... What new bug do we have now? I got an idea: could it be that the file names in the startup script are currently expected to be a fully qualified path, i.e. //ramdisk/PROGRAMS/CLUIDO. Let's try with that!

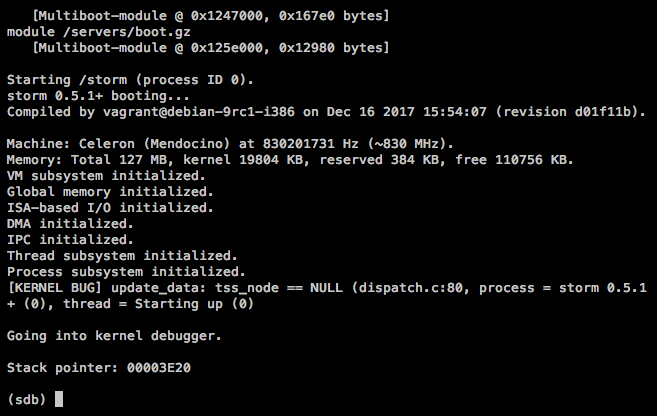

Another illegal page fault in the boot server

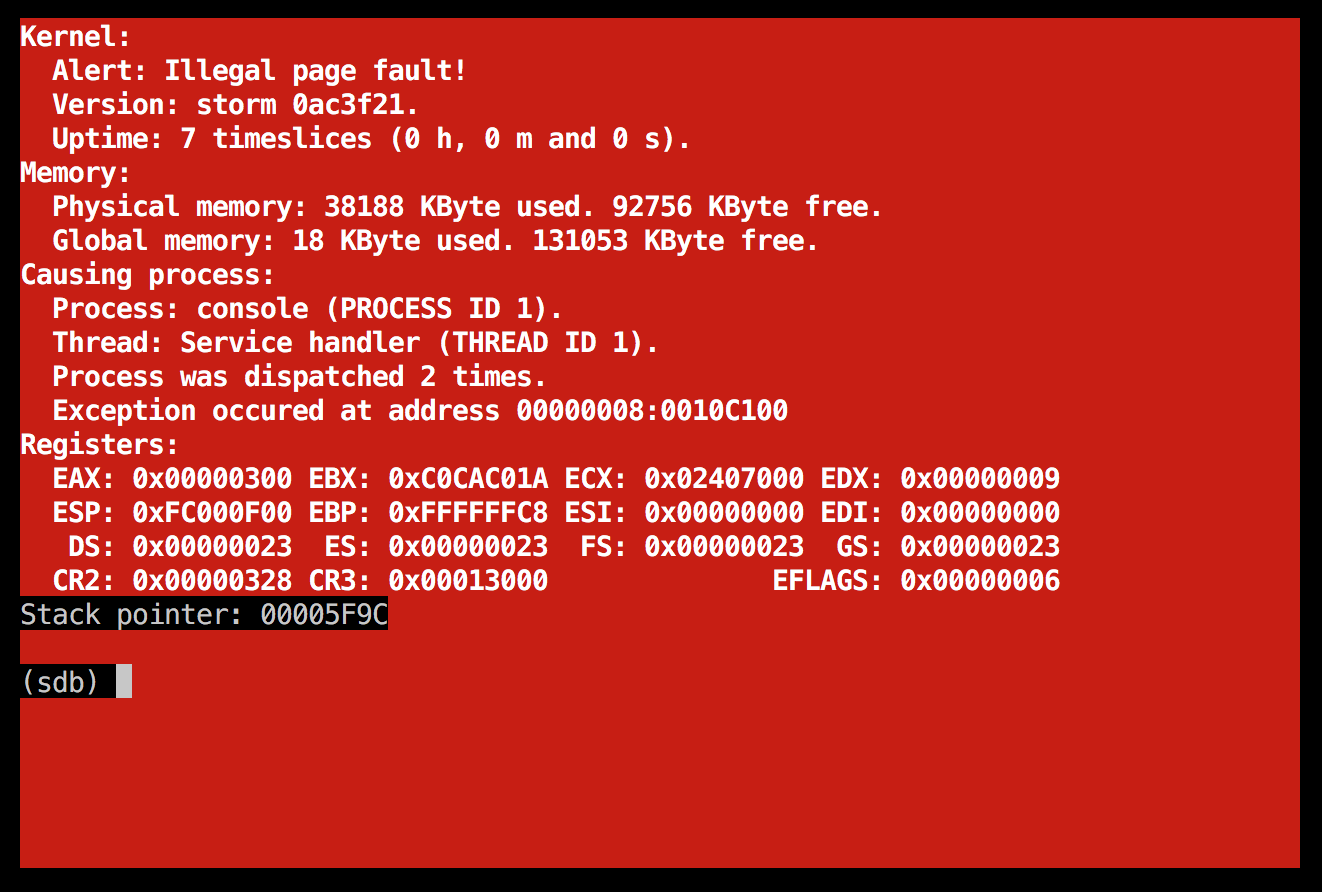

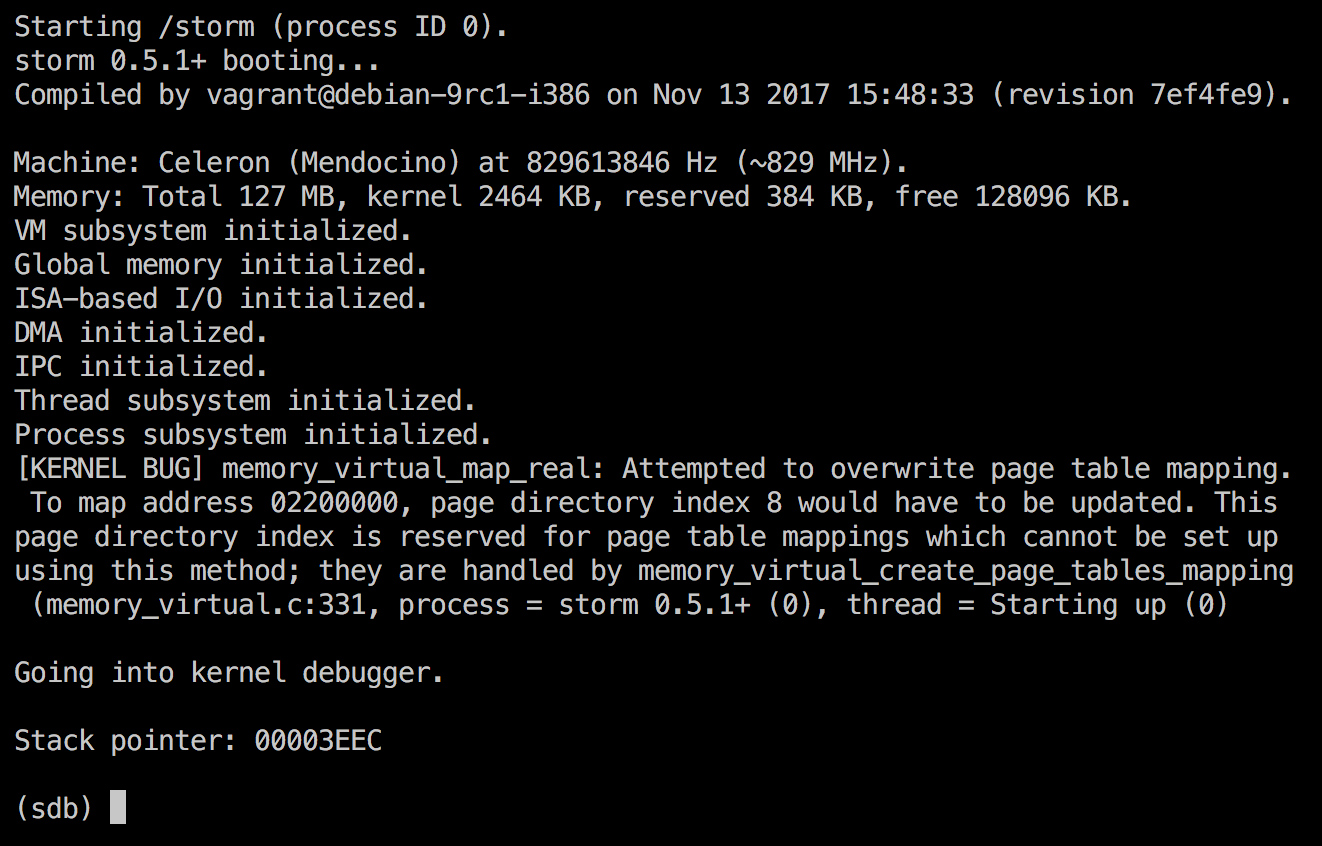

That gave me this on startup:

![]() As strange as it may seem, I actually consider this some progress. It behaves differently than before, so it probably got a bit longer this time, running into some new bug...

As strange as it may seem, I actually consider this some progress. It behaves differently than before, so it probably got a bit longer this time, running into some new bug...

The code that was failing looked like this (slightly reformatted), in memory_virtual_map_real:

page_table = (page_table_entry *) (BASE_PROCESS_PAGE_TABLES + (index * SIZE_PAGE));

// Which entry in the page table to modify.

index = (virtual_page + counter) % 1024;

// Set up a new page table entry.

page_table[index].present = 1;

page_table[index].flags = flags;

page_table[index].accessed = 0;

page_table[index].dirty = 0;

page_table[index].zero = 0;

page_table[index].available = 0;

page_table[index].page_base = physical_page + counter;

Going back into gdb once more

It wasn't so easy to get anything immediate out of this; I think I'll need to re-launch the debugger, this time breaking inside the boot server right at the place where it starts launching the programs.

Interesting; doing so made me find the failing line very quickly. boot.c around line 223 looked like this:

log_print_formatted(&log_structure, LOG_URGENCY_DEBUG,

"Allocating %u bytes for %s.",

directory_entry.size, programs[i]);

memory_allocate((void **) buffer_pointer, directory_entry.size);

directory_entry.size was 211260, that I had already seen in the debugger. But why was the memory allocation failing? This seemed to indicate some very annoying kernel bug. Maybe we should just port the servers to run on top of Linux instead, as I have been thinking. ![]() Debugging kernel bugs can be extremely painful and demotivating sometimes... If we would be running on top of a minimal, stripped-down Linux kernel (with all file systems, device drivers etc disabled), we wouldn't have to waste time trying to debug things like this.

Debugging kernel bugs can be extremely painful and demotivating sometimes... If we would be running on top of a minimal, stripped-down Linux kernel (with all file systems, device drivers etc disabled), we wouldn't have to waste time trying to debug things like this.

I remembered reading something somewhere about memory deallocation being disabled, and if it is enabled the system can be unstable. I looked and found that flag in memory_global.c in the kernel; however, it was disabled so it can't really be the problem here.

I had no better idea than to step into the kernel and looking at the problem more in detail... I quite easily managed to step into the kernel code (file storm/x86/storm in gdb to switch the current executable, then stepi until I managed to get into the kernel, PL0 mode.

memory_allocate (address=0xffffff7c, pages=52, cacheable=1) at memory.c:30

30 {

As indicated previously, the allocation of memory was working:

memory_physical_allocate(&physical_page, pages, "User-level allocation");

...but the actual mapping of this memory was causing the problems:

memory_virtual_map(page_number, physical_page, pages, flags);

I noted while stepping through the kernel code that the stepping seemed "jumping" around, so now was the time to enable -O0 here also, i.e. disable compiler optimizations since they complicate debugging. I also tweaked some of the code to make it easier to understand in the gdb context (note to my dear readers: do not overload the meaning of a variable inside a method to mean different things, even though it can feel like a handy micro-optimization. It makes it harder to watch the value changing while debugging, for example.)

This is btw when conditional breakpoints come in handy:

(gdb) break memory_virtual_allocate if pages = 52